import matplotlib.pyplot as plt

import numpy as np

# Hide warnings

import warnings

warnings.filterwarnings('ignore')

from tensorflow.keras import layers

import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    tf.config.experimental.set_memory_growth(gpus[0], True)  # Allocate GPU memory on demand
    tf.config.set_visible_devices([gpus[0]], "GPU")

# Print the GPU info to confirm the GPU is available
print(gpus)

data_dir = "./34-data/"

img_height = 224

img_width = 224

batch_size = 32

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.3,
    subset="training",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 600 files belonging to 2 classes.
Using 420 files for training.

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.3,
    subset="validation",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 600 files belonging to 2 classes.
Using 180 files for validation.

val_batches = tf.data.experimental.cardinality(val_ds)

test_ds = val_ds.take(val_batches // 5)

val_ds = val_ds.skip(val_batches // 5)

print('Number of validation batches: %d' % tf.data.experimental.cardinality(val_ds))

print('Number of test batches: %d' % tf.data.experimental.cardinality(test_ds))

Number of validation batches: 5
Number of test batches: 1
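The batch counts printed above follow from the file counts and the batch size. The sketch below redoes that arithmetic in plain Python. The variable names are illustrative, and it assumes the last, partially filled batch is kept, which is what the reported numbers suggest.

```python
import math

# Values taken from the outputs printed above
train_files = 420
val_files = 180
batch_size = 32

# A dataset of n files yields ceil(n / batch_size) batches when the
# last partial batch is kept (assumed here)
train_batches = math.ceil(train_files / batch_size)
val_batches_total = math.ceil(val_files / batch_size)

# take(val_batches // 5) becomes the test set, skip(...) keeps the rest
test_batches = val_batches_total // 5
val_batches_left = val_batches_total - test_batches

print(train_batches, val_batches_total, test_batches, val_batches_left)
```

The 14 training batches agree with the `14/14` progress counter in the training log, and the single test batch holds the first 32 validation images.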

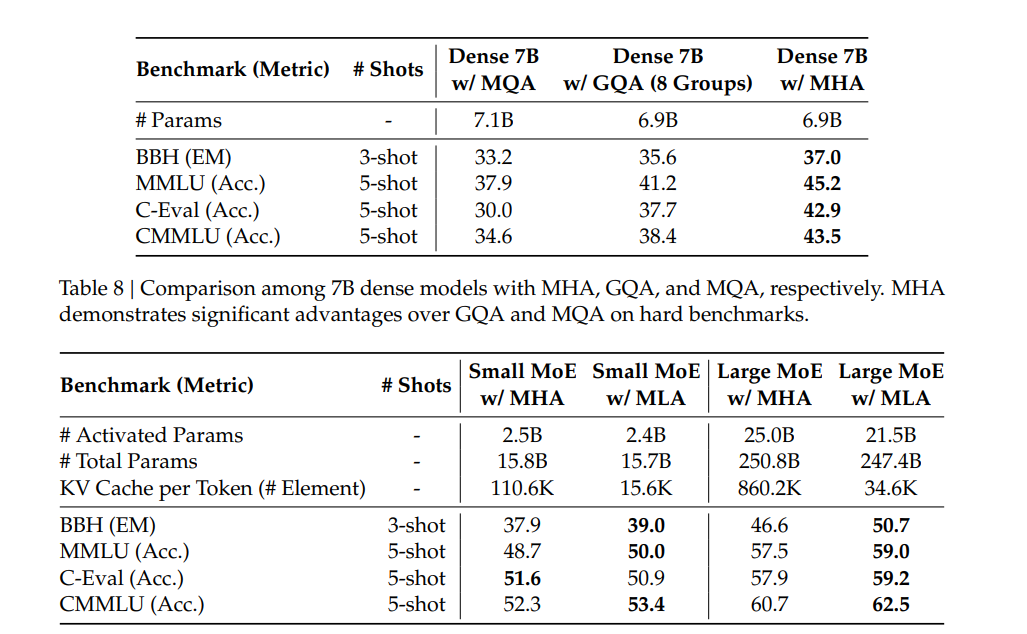

class_names = train_ds.class_names

print(class_names)

['cat', 'dog']

AUTOTUNE = tf.data.AUTOTUNE

def preprocess_image(image, label):
    # Normalize pixel values to the range [0, 1]
    return (image / 255.0, label)

train_ds = train_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

val_ds = val_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

test_ds = test_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

train_ds = train_ds.cache().prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

plt.figure(figsize=(15, 10))  # Figure is 15 wide and 10 high

for images, labels in train_ds.take(1):
    for i in range(8):
        ax = plt.subplot(5, 8, i + 1)
        plt.imshow(images[i])
        plt.title(class_names[labels[i]])
        plt.axis("off")

from tensorflow.keras import layers

from tensorflow.keras.models import Sequential

data_augmentation = Sequential([
    layers.Rescaling(1./255),
    layers.RandomFlip("horizontal_and_vertical"),
    layers.RandomRotation(0.2),
])

# Add the image to a batch.
image = tf.expand_dims(images[i], 0)

plt.figure(figsize=(8, 8))

for i in range(9):
    augmented_image = data_augmentation(image)
    ax = plt.subplot(3, 3, i + 1)
    plt.imshow(augmented_image[0])
    plt.axis("off")
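One detail that may be worth checking: `preprocess_image` already divides every image by 255, and `data_augmentation` begins with `layers.Rescaling(1./255)`. If the image passed to `data_augmentation` comes from the normalized `train_ds`, as it appears to here, the pixels are scaled twice. The plain-Python sketch below only illustrates the arithmetic. The helper names and sample pixel values are made up for the example.

```python
def preprocess(pixel):
    # Same arithmetic as preprocess_image: map [0, 255] to [0, 1]
    return pixel / 255.0

def rescale(pixel, scale=1.0 / 255):
    # Same arithmetic as a Rescaling(1./255) layer: multiply by the scale
    return pixel * scale

raw = [0, 128, 255]                   # hypothetical raw pixel values
once = [preprocess(p) for p in raw]   # values now lie in [0, 1]
twice = [rescale(p) for p in once]    # values now lie in [0, 1/255]

print(max(once))
print(max(twice))
```

After the second scaling the brightest pixel is about 0.004, so the augmented previews would render very dark. Dropping the `Rescaling` layer when the input is already normalized would be one way to avoid that.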

# The augmentation layers can be placed inside the model;
# this definition is overwritten by the one below
model = tf.keras.Sequential([
    data_augmentation,
    layers.Conv2D(16, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
])

model = tf.keras.Sequential([
    layers.Conv2D(16, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Conv2D(32, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Conv2D(64, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    layers.Dense(len(class_names))  # No activation: the model outputs logits
])

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

epochs = 20
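The final `Dense` layer has no activation, so the model outputs raw logits, and `from_logits=True` tells the loss to apply softmax itself. The sketch below reproduces that computation for a single example in plain Python. It is a simplified illustration rather than the Keras implementation, and the logit values are hypothetical.

```python
import math

def softmax(logits):
    # Subtract the maximum for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(logits, label):
    # "Sparse" means the label is an integer class index, not a one-hot vector
    probs = softmax(logits)
    return -math.log(probs[label])

logits = [2.0, 0.0]  # hypothetical outputs for the classes ['cat', 'dog']
loss_if_cat = sparse_categorical_crossentropy(logits, 0)
loss_if_dog = sparse_categorical_crossentropy(logits, 1)

print(loss_if_cat, loss_if_dog)
```

The loss is small when the larger logit belongs to the true class and large otherwise, which is the signal the optimizer minimizes.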

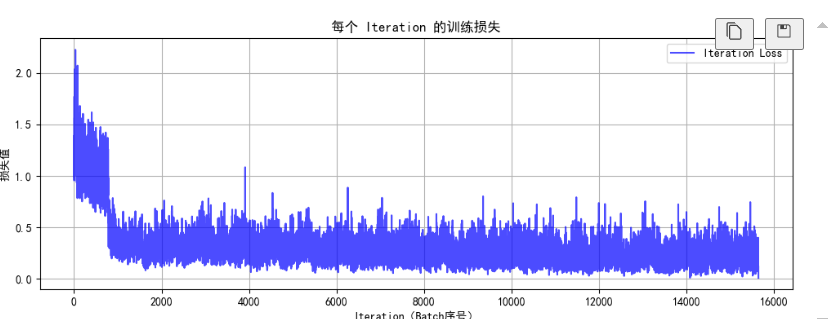

history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=epochs
)

Epoch 1/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 7s 221ms/step - accuracy: 0.5332 - loss: 1.4747 - val_accuracy: 0.6149 - val_loss: 0.6562
Epoch 2/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 186ms/step - accuracy: 0.7401 - loss: 0.6087 - val_accuracy: 0.8243 - val_loss: 0.4336
Epoch 3/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 189ms/step - accuracy: 0.8792 - loss: 0.3209 - val_accuracy: 0.8311 - val_loss: 0.4042
Epoch 4/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 185ms/step - accuracy: 0.9527 - loss: 0.1470 - val_accuracy: 0.8851 - val_loss: 0.2969
Epoch 5/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 183ms/step - accuracy: 0.9618 - loss: 0.0960 - val_accuracy: 0.8919 - val_loss: 0.2645
Epoch 6/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 177ms/step - accuracy: 0.9918 - loss: 0.0439 - val_accuracy: 0.8919 - val_loss: 0.2542
Epoch 7/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 2s 177ms/step - accuracy: 1.0000 - loss: 0.0131 - val_accuracy: 0.8986 - val_loss: 0.3860
Epoch 8/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 192ms/step - accuracy: 1.0000 - loss: 0.0125 - val_accuracy: 0.8784 - val_loss: 0.6349
Epoch 9/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 187ms/step - accuracy: 1.0000 - loss: 0.0189 - val_accuracy: 0.8446 - val_loss: 0.6983
Epoch 10/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 179ms/step - accuracy: 0.9861 - loss: 0.0564 - val_accuracy: 0.8176 - val_loss: 0.6384
Epoch 11/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 2s 178ms/step - accuracy: 0.9861 - loss: 0.0658 - val_accuracy: 0.8581 - val_loss: 0.6085
Epoch 12/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 184ms/step - accuracy: 0.9987 - loss: 0.0110 - val_accuracy: 0.8716 - val_loss: 0.6948
Epoch 13/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 179ms/step - accuracy: 0.9956 - loss: 0.0157 - val_accuracy: 0.9189 - val_loss: 0.2913
Epoch 14/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 2s 177ms/step - accuracy: 0.9948 - loss: 0.0129 - val_accuracy: 0.8851 - val_loss: 0.3093
Epoch 15/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 2s 176ms/step - accuracy: 0.9982 - loss: 0.0141 - val_accuracy: 0.8716 - val_loss: 0.3558
Epoch 16/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 181ms/step - accuracy: 0.9963 - loss: 0.0087 - val_accuracy: 0.8784 - val_loss: 0.4718
Epoch 17/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 178ms/step - accuracy: 0.9946 - loss: 0.0170 - val_accuracy: 0.8986 - val_loss: 0.3190
Epoch 18/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 185ms/step - accuracy: 1.0000 - loss: 0.0042 - val_accuracy: 0.9257 - val_loss: 0.3658
Epoch 19/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 189ms/step - accuracy: 1.0000 - loss: 8.3204e-04 - val_accuracy: 0.9122 - val_loss: 0.3734
Epoch 20/20
14/14 ━━━━━━━━━━━━━━━━━━━━ 3s 191ms/step - accuracy: 1.0000 - loss: 5.7922e-04 - val_accuracy: 0.9054 - val_loss: 0.3889
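In this log, training accuracy reaches 1.0 by epoch 7 while the validation metrics keep fluctuating, so the last epoch is not necessarily the best one. The sketch below copies the validation values from the log into plain lists and looks up the best epochs. In a live session the same lists should be available as `history.history["val_loss"]` and `history.history["val_accuracy"]`.

```python
# Validation metrics copied from the training log above (epochs 1 to 20)
val_loss = [0.6562, 0.4336, 0.4042, 0.2969, 0.2645, 0.2542, 0.3860,
            0.6349, 0.6983, 0.6384, 0.6085, 0.6948, 0.2913, 0.3093,
            0.3558, 0.4718, 0.3190, 0.3658, 0.3734, 0.3889]
val_accuracy = [0.6149, 0.8243, 0.8311, 0.8851, 0.8919, 0.8919, 0.8986,
                0.8784, 0.8446, 0.8176, 0.8581, 0.8716, 0.9189, 0.8851,
                0.8716, 0.8784, 0.8986, 0.9257, 0.9122, 0.9054]

# Epoch numbers are 1-based, list indices are 0-based
best_loss_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
best_acc_epoch = max(range(len(val_accuracy)), key=val_accuracy.__getitem__) + 1

print("Lowest val_loss at epoch", best_loss_epoch)
print("Highest val_accuracy at epoch", best_acc_epoch)
```

The two criteria pick different epochs for this run, which is a reminder to decide up front which metric a checkpoint should be selected on.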

loss, acc = model.evaluate(test_ds)

print("Accuracy", acc)

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 135ms/step - accuracy: 0.9062 - loss: 0.3615
Accuracy 0.90625
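Because the model outputs logits, a class prediction is the index of the largest logit, and accuracy is the fraction of predictions that match the labels. The sketch below shows both steps in plain Python. The logits and labels are hypothetical, and the helper names are not part of Keras.

```python
class_names = ['cat', 'dog']

def predict_class(logits):
    # argmax over the logits; softmax is not needed to pick the winner
    index = max(range(len(logits)), key=lambda i: logits[i])
    return class_names[index]

def accuracy(predicted, actual):
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

batch_logits = [[1.2, -0.3], [-0.8, 0.5], [0.1, 0.4]]  # hypothetical model outputs
labels = ['cat', 'dog', 'cat']                          # hypothetical true labels

predictions = [predict_class(row) for row in batch_logits]
acc = accuracy(predictions, labels)

print(predictions, acc)
print(29 / 32)
```

If the single test batch holds 32 images, the reported accuracy of 0.90625 corresponds to 29 correct predictions.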

import random

# This is a place where you can experiment freely

def aug_img(image):
    seed = (random.randint(0, 9), 0)
    # Randomly change the image contrast
    stateless_random_contrast = tf.image.stateless_random_contrast(image, lower=0.1, upper=1.0, seed=seed)
    return stateless_random_contrast

image = tf.expand_dims(images[3]*255, 0)

print("Min and max pixel values:", image.numpy().min(), image.numpy().max())

Min and max pixel values: 0.0 255.0

plt.figure(figsize=(8, 8))

for i in range(9):
    augmented_image = aug_img(image)
    ax = plt.subplot(3, 3, i + 1)
    plt.imshow(augmented_image[0].numpy().astype("uint8"))
    plt.axis("off")

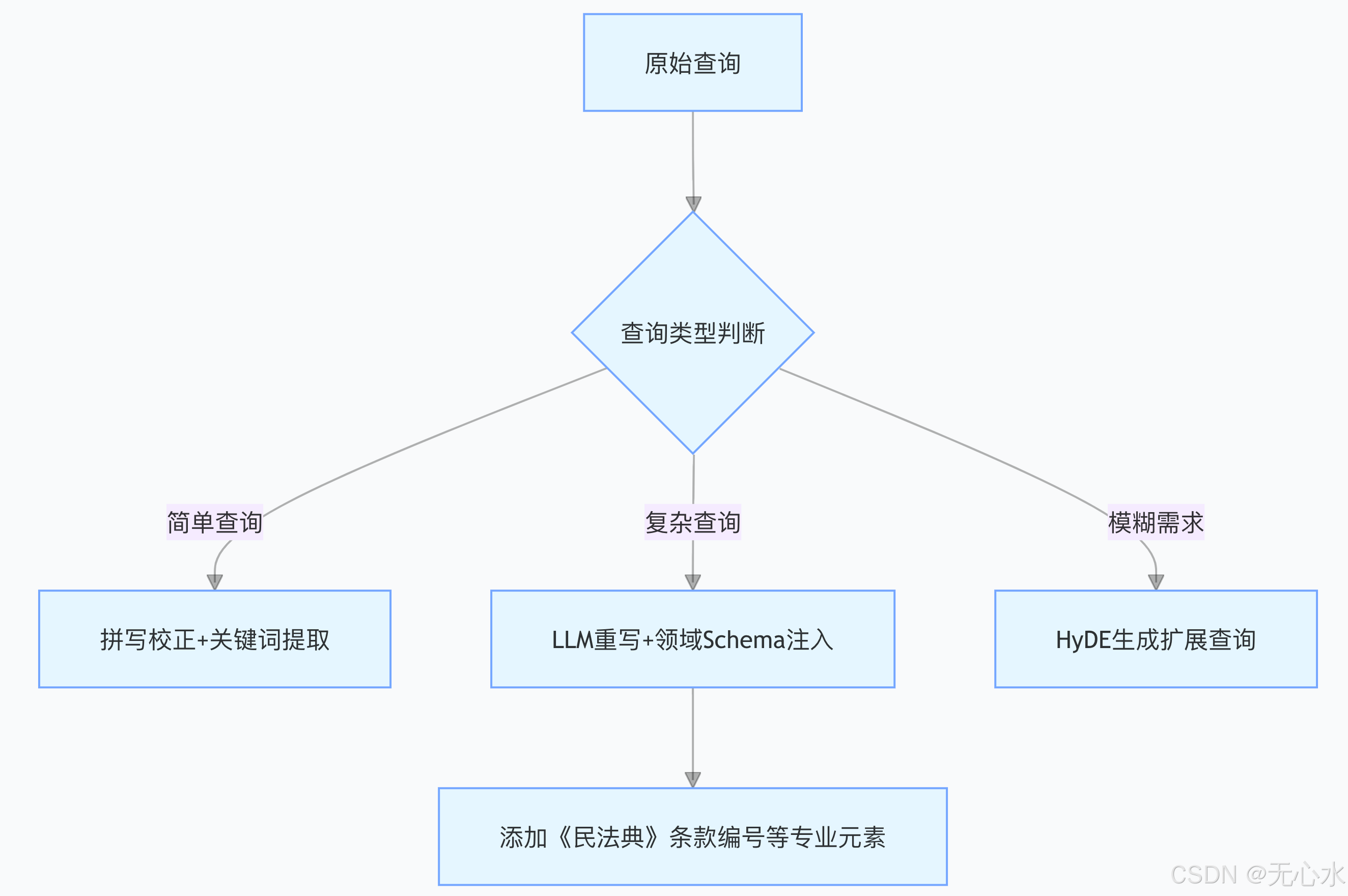
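As far as the TensorFlow documentation describes it, a contrast adjustment replaces each pixel `x` in a channel with `(x - mean) * factor + mean`, where `mean` is that channel's mean, and `stateless_random_contrast` draws `factor` between `lower` and `upper`. The plain-Python sketch below applies that formula to one hypothetical channel. It is an illustration of the formula, not the library code.

```python
def adjust_contrast(channel, factor):
    # Pull every pixel toward (factor < 1) or away from (factor > 1) the mean
    mean = sum(channel) / len(channel)
    return [(x - mean) * factor + mean for x in channel]

channel = [0.0, 100.0, 200.0]  # hypothetical pixel values of one channel

low_contrast = adjust_contrast(channel, 0.1)  # values move close to the mean of 100
unchanged = adjust_contrast(channel, 1.0)     # factor 1.0 leaves the channel as is

print(low_contrast)
print(unchanged)
```

With `lower=0.1, upper=1.0` the augmentation can only reduce contrast. Since the seed is built from `random.randint(0, 9)`, there are only ten possible seeds, and a stateless op returns the same result for the same seed, so at most ten distinct variants can appear.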

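Takeaway: my TensorFlow version is relatively new, so I had to modify the code in the data augmentation step. The updated code is as follows: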

from tensorflow.keras import layers

from tensorflow.keras.models import Sequential

data_augmentation = Sequential([
    layers.Rescaling(1./255),
    layers.RandomFlip("horizontal_and_vertical"),
    layers.RandomRotation(0.2),
])

I learned how to adapt code to the version of the library being imported.
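The post does not show the code before the change. Assuming it used the older `layers.experimental.preprocessing` location for these layers, one hedged way to support both layouts is to try several attribute paths in order. The helper `resolve_attr` below is hypothetical, and the example uses stand-in objects instead of TensorFlow so that it runs anywhere.

```python
from types import SimpleNamespace

def resolve_attr(root, dotted_paths):
    """Return the first attribute path that exists on root."""
    for path in dotted_paths:
        obj = root
        try:
            for name in path.split("."):
                obj = getattr(obj, name)
        except AttributeError:
            continue  # this layout is not available, try the next path
        return obj
    raise AttributeError(f"none of {dotted_paths} found")

# Stand-ins for a newer and an older `layers` module (not real TensorFlow objects)
new_layers = SimpleNamespace(Rescaling="new Rescaling")
old_layers = SimpleNamespace(
    experimental=SimpleNamespace(
        preprocessing=SimpleNamespace(Rescaling="old Rescaling")))

paths = ["Rescaling", "experimental.preprocessing.Rescaling"]
found_new = resolve_attr(new_layers, paths)
found_old = resolve_attr(old_layers, paths)

print(found_new, found_old)
```

With a real installation the call would look like `resolve_attr(layers, paths)`. Pinning the TensorFlow version in the project requirements is a simpler alternative when only one version needs to be supported.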