Abstract

Recent methods for label-free 3D semantic segmentation aim to assist 3D model training by leveraging the open-world recognition ability of pre-trained vision language models. However, these methods usually suffer from inconsistent and noisy pseudo-labels provided by the vision language models. To address this issue, we present a hierarchical intra-modal correlation learning framework that captures visual and geometric correlations in 3D scenes at three levels (intra-set, intra-scene, and inter-scene) to help learn more compact 3D representations. We refine pseudo-labels using intra-set correlations within each geometric consistency set, and we align the features of visually and geometrically similar points using intra-scene and inter-scene correlation learning. We also introduce a feedback mechanism to distill the correlation learning capability into the 3D model. Experiments on both indoor and outdoor datasets show the superiority of our method. We achieve a state-of-the-art 36.6% mIoU on the ScanNet dataset and 23.0% mIoU on the nuScenes dataset, improvements of 7.8% and 2.2% mIoU, respectively, over the previous SOTA. We also provide theoretical analysis and qualitative visualizations to discuss the mechanism, and we conduct thorough ablation studies to support the effectiveness of our framework.

1. Introduction

Label-free 3D semantic segmentation, which aims to achieve scene understanding without reliance on labeled data, has recently emerged as a vital research topic. This task holds significant value for practical applications, including autonomous driving, robotic navigation, and augmented reality, where the collection of 3D annotations is expensive and novel objects may appear.

Existing methods [4,5,8,15,19,21,31,36,37,40] leverage the open-world recognition capability of pre-trained vision language models, such as CLIP [22] and MaskCLIP [39],

现有的方法 [4,5,8,15,19,21,31,36,37,40] 利用了预训练视觉语言模型(如 CLIP [22] 和 MaskCLIP [39])的开放世界识别能力。

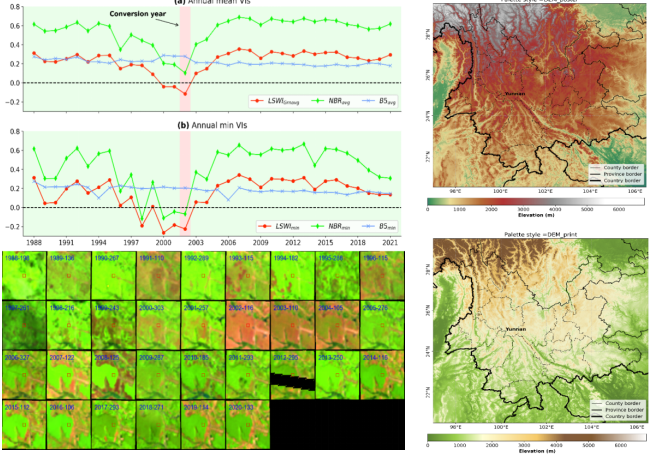

Figure 1. To address the noisy and inconsistent pseudo-label challenge, we design a hierarchical intra-modal correlation learning framework, including intra-set label refinement, intra-scene correlation learning, and inter-scene correlation learning. Intra-set label refinement reduces label inconsistency for points with similar geometric attributes in each local set. For intra-scene correlation learning, we model point correlations in each scene and constrain visually and geometrically similar points to be closer in feature space. For inter-scene correlation learning, we use cross-scene point correlations to help constrain the consistency of feature distribution in different scenes.

图 1. 为了解决噪声和不一致的伪标签挑战,我们设计了一个分层模态内相关学习框架,包括集合内标签细化、场景内相关学习和场景间相关学习。集合内标签细化减少了每个局部集合中具有相似几何属性的点的标签不一致性。对于场景内相关学习,我们对每个场景中的点相关性进行建模,并约束视觉和几何上相似的点在特征空间中更接近。对于场景间相关学习,我们使用跨场景点相关性来帮助约束不同场景中特征分布的一致性。

to train 3D models through cross-modal transfer learning. These vision language models generate semantic features from texts and images to provide semantic guidance for 3D model training. However, pre-trained on image classification tasks, these vision language models struggle to generate consistent dense semantic predictions [4, 39]. For instance, as shown in Fig. 1, the predictions for pixels belonging to the same chair may differ within each view and across multiple views. The predictions for chairs in different scenes may also be inconsistent. Such ambiguous guidance poses significant challenges for the 3D model to learn stable visual representations. Recently, Segment Anything (SAM) [16] has been proposed to pretrain models on dense prediction tasks and can obtain accurate object masks. Leveraging SAM, concurrent work Chen et al. [4] use a label refinement strategy to mitigate noisy supervision in each mask, resulting in significant improvements. However, the use of SAM comes with a great increase in training costs. More importantly, the supervision inconsistency across multiple views and different scenes still exists.

通过跨模态迁移学习训练3D模型。这些视觉语言模型从文本和图像中生成语义特征,为3D模型训练提供语义指导。然而,这些视觉语言模型在图像分类任务上预训练,难以生成一致的密集语义预测 [4, 39]。例如,如图 1 所示,属于同一把椅子的像素的预测结果在每个视角内以及跨多个视角之间可能会有所不同。不同场景中椅子的预测结果也可能不一致。这种模棱两可的指导对 3D 模型学习稳定的视觉表示提出了重大挑战。最近,Segment Anything (SAM) [16] 被提出用于对密集预测任务进行预训练,并且可以获得准确的目标掩码。利用 SAM,陈等人 [4] 采用标签细化策略来缓解每个掩码中的噪声监督,从而取得了显著的改进。然而,使用 SAM 会导致训练成本大幅增加。更重要的是,多视图和不同场景之间的监督不一致仍然存在。

We attribute this inconsistency to the unstable features that the vision language model learns from images, which suffer from occlusions and lack geometric information. We observe that point cloud data is free of occlusion and rich in geometric cues, which facilitates the learning of stable point features. This allows us to establish reliable correlations between points, providing strong guidance that helps maintain feature compactness under inconsistent supervision. As shown in Fig. 1, intra-modal correlations in 3D scenes fall into three categories:

• Intra-set correlation: Local point sets that share similar geometric attributes usually yield cleaner and sharper segmentation boundaries than those produced by the vision language model. This can be used to reduce the noise and inconsistency of pseudo-labels and thus improve local feature coherence.

• Intra-scene correlation: Besides aligning 3D-2D and 3D-text features, aligning the features of objects with similar appearance and geometry during training helps the 3D model mitigate disruptions caused by ambiguous supervision from the vision language model, leading to a more focused and concise feature space.

• Inter-scene correlation: Aligning the features of objects with similar appearance and geometry across different scenes further resolves contradictory semantic guidance between scenes, leading to consistent feature distributions across scenes.

In this paper, we present a hierarchical intra-modal correlation learning framework that leverages the three aforementioned correlations. (1) We use an intra-set label refinement scheme that statistically analyzes the pseudo-labels within each geometric consistency set and refines them to reduce label conflicts. (2) We propose an intra-scene correlation learning module that captures point feature correlations between different objects and thus constrains visually and geometrically similar points to be closer in feature space. (3) We introduce an inter-scene correlation learning module that uses cross-scene attention to model correlations among objects in different scenes, encouraging the 3D model to learn stable feature distributions. Finally, we design a feedback mechanism that aligns the output features of the 3D model with the final aggregated point features, thereby distilling the correlation learning capability into the 3D model. Experiments on both indoor and outdoor datasets demonstrate the superiority of our method. We achieve a state-of-the-art (SOTA) mIoU of 36.6% on the ScanNet dataset, surpassing the previous SOTA method CLIP2Scene [5] by 7.8% mIoU. On the nuScenes dataset, we achieve 23.0% mIoU, surpassing CLIP2Scene by 2.2% mIoU. Theoretical analysis, qualitative visualization, and extensive ablation studies further support the effectiveness of our framework.

The key contributions can be summarized as follows.

• We propose a novel hierarchical intra-modal correlation learning framework for label-free 3D semantic segmentation that leverages intra-modal correlations at three levels: intra-set, intra-scene, and inter-scene, to capture visual and geometric correlations hierarchically and thus assist in learning compact 3D features.

• We present a comprehensive theoretical analysis, qualitative visualization, extensive ablation studies, and a thorough discussion of our framework’s mechanism.

• Our method achieves promising results on both indoor and outdoor datasets, showing significant improvement over previous SOTA methods.

2. Related Work

2.1. Label-free 3D Semantic Segmentation

Label-free 3D semantic segmentation, which aims to achieve scene understanding without labeled data, has attracted significant attention in recent years. Existing works [4, 5, 8, 15, 18, 19, 21, 23, 31, 32, 36, 37, 40] mainly use powerful pre-trained vision language models [16, 22, 39] to extract semantic knowledge from the image and text modalities and distill this knowledge into a 3D model through cross-modal transfer learning. Specifically, these methods first construct dense correspondences among 3D points, 2D images, and text descriptions, and then optimize the similarities between point features and text embeddings according to 2D pseudo-labels. However, since vision language models are typically pre-trained on image classification tasks without dense prediction constraints, the pseudo-labels derived from their dense visual features are usually noisy and inconsistent. This yields ambiguous semantic guidance for 3D model training and hinders its performance. To address this issue, the concurrent work of Chen et al. [4] uses segmentation masks from Segment Anything [16] to reduce the noise in 2D pseudo-labels and improve the 3D model's performance. Nonetheless, SAM incurs considerable costs during training; a comparison of training costs is provided in Section 5 of the supplementary material. Moreover, pseudo-labels remain inconsistent across views within each scene and across different scenes, which impedes the 3D model from learning stable visual representations. In this work, we design a hierarchical intra-modal correlation learning framework that models visual and geometric correlations between points within each local neighborhood, within each scene, and across different scenes. Based on the learned multi-scale correlations, we pull the deep features of highly correlated points closer together, leading to a concise 3D feature space with fewer conflicts.

2.2. Scene Context Learning

Scene context learning exploits semantic and spatial correlations among visual elements to facilitate various scene-understanding tasks, such as semantic segmentation and object detection. Existing methods fall mainly into three categories: convolution-based, attention-based, and graph-based methods. Convolution-based methods design various sparse convolutional kernels [6, 12–14, 27] to progressively integrate multi-scale point features, helping the 3D model capture both local details and global context. Attention-based methods [9, 10, 17, 26, 29, 35, 38] model semantic and spatial correlations between different points, enabling the 3D model to learn robust contextual point representations. Graph-based methods [1, 25, 28, 30, 33, 34] treat scenes as graphs and use graph convolutional networks to propagate information between visual elements. By leveraging the inherent structure of the data, these methods effectively capture the visual correlations within each scene. In this work, we use flexible and adaptive attention mechanisms on point clouds to capture visual correlations in 3D scenes for label-free 3D semantic segmentation.

3. Method

Following the cross-modal transfer learning framework in CLIP2Scene [5], we optimize a 3D model using 2D pseudo-labels generated by MaskCLIP [5, 39]. In Sec. 3.1, we formulate the cross-modal transfer learning framework, while in Sec. 3.2, we present our hierarchical scene correlation learning framework that leverages visual and geometric correlations to build a compact feature space. We also introduce a feedback distillation module in Sec. 3.3 to incorporate the correlation learning ability into the 3D model. The overall training objective is explained in Sec. 3.4.

3.1. Cross-modal Transfer Learning

Given a scene $S$, its projected image $I$ under a certain camera pose, and class prompts $\{T_c\}_{c \in [1, C]}$, where $C$ is the number of categories, the pre-trained vision language model maps each image pixel $I_{ij}$ to a feature $f^I_{ij} \in \mathbb{R}^d$ and each class prompt $T_c$ to a text embedding $\hat{T}_c \in \mathbb{R}^d$. Given a similarity measure $\psi$, the pixel-wise pseudo-label is defined as $l_{ij} = \arg\max_c \psi(f^I_{ij}, \hat{T}_c)$. Meanwhile, a point cloud $P$ is sampled from the scene and processed by a 3D encoder that maps each point $p_m$ to a feature $f^P_m \in \mathbb{R}^d$. We then project $p_m$ onto the image plane of $I$, filter the projection by depth, and transfer the pseudo-label of the corresponding pixel $I_{ij}$ to $p_m$, yielding $(f^P_m, \hat{T}_{l_{ij}})$, which we call a pair. For brevity, we write $f_m$ for the $m$-th point feature and $l_m$ for its paired pseudo-label. By varying the camera pose from which $S$ is projected, we collect a set of such pairs, denoted $\mathcal{M}$. Finally, a cross-entropy loss is used for cross-modal feature alignment as follows,

$$\mathcal{L}_{ce} = -\sum_{\mathcal{M}} \log \frac{\exp\big(\psi(f_m, \hat{T}_{l_m})\big)}{\sum_c \exp\big(\psi(f_m, \hat{T}_c)\big)}. \tag{1}$$

Denote $h_{m,c} = \psi(f_m, \hat{T}_c)$ and $y_{m,c} = \frac{\exp(h_{m,c})}{\sum_{c'} \exp(h_{m,c'})}$. The gradient of the $m$-th term of the loss with respect to $h_{m,c}$ can be expressed as

$$\frac{\partial \mathcal{L}_m}{\partial h_{m,c}} = \begin{cases} y_{m,c} - 1, & c = l_m; \\ y_{m,c}, & c \neq l_m. \end{cases} \tag{2}$$

During optimization, this gradient forces $h_{m,l_m}$ to increase and $h_{m,c}$ ($c \neq l_m$) to decrease, driving $f_m$ towards $\hat{T}_{l_m}$ and away from $\hat{T}_c$ ($c \neq l_m$). This aligns the point features with the text embeddings.
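
As a sanity check on Eqs. 1 and 2, the minimal Python sketch below evaluates the $m$-th loss term from the logits $h_{m,c} = \psi(f_m, \hat{T}_c)$ and compares the closed-form gradient with central finite differences. The helper names and the toy logits are illustrative assumptions, not part of the method.

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    mx = max(z)
    exps = [math.exp(v - mx) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def point_ce(h, label):
    """m-th term of Eq. (1), given logits h[c] = psi(f_m, T_c)."""
    return -math.log(softmax(h)[label])


def point_ce_grad(h, label):
    """Closed-form gradient of Eq. (2): y_c - 1 for c == label, y_c otherwise."""
    y = softmax(h)
    return [y_c - 1.0 if c == label else y_c for c, y_c in enumerate(y)]


def numeric_grad(fn, h, eps=1e-6):
    """Central finite differences of fn with respect to each entry of h."""
    grad = []
    for c in range(len(h)):
        h_plus, h_minus = list(h), list(h)
        h_plus[c] += eps
        h_minus[c] -= eps
        grad.append((fn(h_plus) - fn(h_minus)) / (2 * eps))
    return grad


# Toy logits for one point with C = 3 classes; the pseudo-label is class 1.
h = [2.0, 0.5, -1.0]
label = 1
analytic = point_ce_grad(h, label)
numeric = numeric_grad(lambda z: point_ce(z, label), h)
```

Only the pseudo-label entry of the gradient is negative, so a gradient-descent step raises $h_{m,l_m}$ and lowers every other logit; the entries sum to zero because the softmax outputs sum to one.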

Following CLIP2Scene [5], we use MaskCLIP [39] as the pre-trained vision language model and the inner product $\psi = \langle \cdot, \cdot \rangle$ as the similarity measure. During training, the pre-trained text embeddings remain fixed, serving as feature anchors for different categories. The loss function $\mathcal{L}_{ce}$ optimizes the 3D model by pulling point features towards the corresponding text embeddings according to the 2D pseudo-labels. However, due to the inherent noise and inconsistency of the pseudo-labels, point features of the same category may be pulled towards different text embeddings, resulting in a confusing 3D feature space. To address this, we propose a hierarchical intra-modal correlation learning framework that hierarchically captures visual and geometric correlations in 3D scenes and helps learn more consistent point representations across environments.
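
The pixel pseudo-labeling and point-to-pixel label transfer described at the start of Sec. 3.1 can be sketched as follows. This is a minimal illustration under our own assumptions: points are already expressed in camera coordinates, the camera follows a pinhole model with hypothetical intrinsics `fx, fy, cx, cy`, and "filtered by depth" is implemented as a visibility test that keeps a point only if its depth agrees with the depth map within a hypothetical tolerance `tol`. Pixel pseudo-labels use the inner product as the similarity $\psi$.

```python
def pixel_pseudo_labels(pixel_feats, text_embs):
    """l_ij = argmax_c <f_ij, T_c> for every pixel (psi = inner product)."""
    def score(f, t):
        return sum(a * b for a, b in zip(f, t))

    return [
        [max(range(len(text_embs)), key=lambda c: score(f, text_embs[c])) for f in row]
        for row in pixel_feats
    ]


def transfer_labels(points_cam, depth, labels, fx, fy, cx, cy, tol=0.05):
    """Map point index m -> pseudo-label of the pixel it projects to.

    points_cam: (x, y, z) in camera coordinates; depth: per-pixel depth map.
    A point is kept only if it is in front of the camera, inside the image,
    and its depth matches the depth map (i.e. it is not occluded).
    """
    height, width = len(depth), len(depth[0])
    pairs = {}
    for m, (x, y, z) in enumerate(points_cam):
        if z <= 0:
            continue  # behind the camera
        j = int(round(fx * x / z + cx))  # column index
        i = int(round(fy * y / z + cy))  # row index
        if not (0 <= i < height and 0 <= j < width):
            continue  # outside the image
        if abs(depth[i][j] - z) > tol:
            continue  # a closer surface occupies this pixel
        pairs[m] = labels[i][j]
    return pairs


pixel_feats = [[[1.0, 0.0], [0.0, 1.0]], [[0.2, 0.9], [0.8, 0.1]]]  # 2x2 image, d = 2
text_embs = [[1.0, 0.0], [0.0, 1.0]]                                  # C = 2 classes
labels = pixel_pseudo_labels(pixel_feats, text_embs)
depth = [[1.0, 1.0], [1.0, 1.0]]
points = [(0.0, 0.0, 1.0), (0.0, 0.0, 2.0), (1.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
pairs = transfer_labels(points, depth, labels, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

Here point 1 is discarded as occluded and point 3 as lying behind the camera, so `pairs` maps points 0 and 2 to the labels of pixels (0, 0) and (0, 1), i.e. `{0: 0, 2: 1}`.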

3.2. Hierarchical Scene Correlation Learning

To leverage intra-modal correlations for learning consistent visual features, we design a hierarchical scene correlation learning framework, shown in Fig. 2, which consists of three parts: intra-set label refinement, intra-scene correlation learning, and inter-scene correlation learning.

Intra-set label refinement. Because CLIP is pre-trained on image classification tasks, its dense predictions can be noisy, which hinders the model's performance. The concurrent work of Chen et al. [4] addresses this inconsistency issue with the Segment Anything model [16], but at considerable additional training cost. In this work, we choose a more efficient approach to label refinement. Our key insight is that geometric cues such as normal smoothness can help identify points that share the same semantics within an object part. Specifically, following Chen et al. [3], given an equivalence relation $\sim$ between points in all scenes $\cup_i S_i$, we obtain the quotient set $\{G_k\} = (\cup_i S_i)/\!\sim$, whose elements we call geometric consistency sets. We then project the points of each set $G_k$ onto the image plane of $I$ and denote the projected set as $\hat{G}_k$. Within each projected set $\hat{G}_k$, we apply a voting mechanism, $\hat{l}_{ij} = \mathrm{Mode}(\{l_{uv}\}_{I_{uv} \in \hat{G}_k})$ for every $I_{ij} \in \hat{G}_k$, which replaces each original pseudo-label with the most frequent label in the set and yields refined pseudo-labels $\{\hat{l}_{ij}\}$. In this work, we generate geometric consistency sets using a normal-based over-segmentation algorithm [11, 20]. Other equivalence relations, such as topological, semantic, or functional equivalence, could also be used to generate point sets. Fig. 3 shows the refined pseudo-labels on a point cloud. Through intra-set label refinement, we efficiently reduce the noise in pseudo-labels and provide locally consistent supervision during training.
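
The voting step can be sketched in a few lines of Python. This is an illustrative sketch, assuming each labeled pixel (or point) already carries the index of its geometric consistency set; the function name `refine_labels` is hypothetical, and ties are broken by the label encountered first, a choice the text does not specify.

```python
from collections import Counter


def refine_labels(labels, set_ids):
    """Intra-set voting: replace every label with the mode of its set.

    labels[i]  : pseudo-label of the i-th pixel/point
    set_ids[i] : index k of the geometric consistency set G_k it belongs to
    """
    votes = {}
    for label, k in zip(labels, set_ids):
        votes.setdefault(k, Counter())[label] += 1
    # most_common(1) breaks ties by first occurrence
    mode = {k: counter.most_common(1)[0][0] for k, counter in votes.items()}
    return [mode[k] for k in set_ids]


# Two sets: set 0 has labels {3, 3, 5, 3}, set 1 has labels {7, 7, 2}.
refined = refine_labels([3, 3, 5, 3, 7, 7, 2], [0, 0, 0, 0, 1, 1, 1])
```

The isolated labels 5 and 2 are overwritten by the majority label of their sets, giving `[3, 3, 3, 3, 7, 7, 7]`.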

Figure 2. Illustration of the hierarchical intra-modal correlation learning framework. First, we use a CLIP model and a 3D model to generate pixel-wise pseudo-labels and point features. Then we perform intra-modal correlation learning hierarchically. For intra-set label refinement, we cluster points into geometric consistency sets and adopt a voting mechanism to refine pseudo-labels in each set. We extract intra-scene correlation by sampling points in each set and processing them via a vision transformer. For inter-scene correlation learning, we use a cross-scene vision transformer that processes points from multiple scenes. Finally, we align the output features of the 3D model with the final aggregated point features. Note that only the 3D model is retained for inference.

Intra-scene correlation learning. Previous cross-modal transfer learning methods typically optimize the 3D model by aligning point features with text embeddings according to 2D pseudo-labels, as formulated in Eq. 1. Because the 2D pseudo-labels tend to be noisy and inconsistent, the resulting 3D feature space is often ambiguous. We therefore exploit intra-scene correlations to pull the features of strongly correlated points closer together and construct a more focused feature space. First, we sample a set of points $\{p_i\}_k$ from each geometric consistency set $G_k$ and concatenate all sampled points into a subset of the input point cloud, denoted $P^S$. The number of points $M_k$ sampled from $G_k$ is proportional to its share of the total point count, i.e., $M_k = M \times N_k / N$, where $N_k$ is the number of points in $G_k$ and $N$ is the total number of points. In our experiments, we cap the total number of sampled points at $M = 1024$ to limit GPU memory consumption.
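
The proportional allocation $M_k = M \times N_k / N$ rarely yields integers. The sketch below assumes a largest-remainder rounding rule so that the quotas sum exactly to $M$, and uniform random sampling within each set; both choices, and the names `allocate_samples` and `sample_subset`, are our own assumptions rather than details given in the text.

```python
import math
import random


def allocate_samples(set_sizes, M=1024):
    """Quotas M_k = M * N_k / N, rounded by the largest-remainder method."""
    N = sum(set_sizes)
    raw = [M * n / N for n in set_sizes]
    quota = [math.floor(r) for r in raw]
    leftover = M - sum(quota)
    # Give the remaining samples to the sets with the largest fractional parts.
    order = sorted(range(len(raw)), key=lambda k: raw[k] - quota[k], reverse=True)
    for k in order[:leftover]:
        quota[k] += 1
    return quota


def sample_subset(sets, M=1024, seed=0):
    """sets: one list of point indices per G_k. Returns the indices forming P^S."""
    rng = random.Random(seed)
    quotas = allocate_samples([len(s) for s in sets], M)
    subset = []
    for pts, q in zip(sets, quotas):
        subset.extend(rng.sample(pts, min(q, len(pts))))  # uniform, without replacement
    return subset


sets = [list(range(0, 500)), list(range(500, 800)), list(range(800, 1000))]
subset = sample_subset(sets, M=100)
```

With set sizes 500, 300, and 200 and $M = 100$, the quotas are exactly 50, 30, and 20, so larger geometric consistency sets contribute proportionally more points to $P^S$.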

The sampled point features in the $i$-th scene, $\{f_m\}_{p_m \in P_i^S}$, are processed by a transformer block, whose $m$-th output feature can be formulated as

$$\tilde{f}_m = f_m + \sum_n w_{mn} v_n, \qquad \hat{f}_m = \tilde{f}_m + \mathrm{Linear}(\tilde{f}_m) = (W + I)\Big(f_m + \sum_n w_{mn} v_n\Big) + b = f'_m + \sum_n w_{mn} v'_n + b, \tag{3}$$

where $w_{mn}$ denotes the attention weight between the $m$-th and $n$-th visual features, $v_n$ is the $n$-th value vector, obtained by passing $f_n$ through a linear projection layer, and $W \in \mathbb{R}^{d \times d}$ and $b \in \mathbb{R}^d$ are the weight matrix and bias vector of the linear layer. In the last step, $f'_m = (W + I) f_m$ and $v'_n = (W + I) v_n$. Because $b$ does not depend on the point features or the attention weights, we omit it from the loss for brevity. The cross-entropy loss is formulated as,

$$\hat{\mathcal{L}}_{ce} = -\sum_{\mathcal{M}} \log \frac{\exp\big(\psi(f'_m + \sum_n w_{mn} v'_n, \hat{T}_{\hat{l}_m})\big)}{\sum_c \exp\big(\psi(f'_m + \sum_n w_{mn} v'_n, \hat{T}_c)\big)}, \tag{4}$$

where $\hat{l}_m$ denotes the refined pseudo-label of point $p_m$.
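
The rewriting in Eq. 3 relies only on the linearity of the residual linear layer, and splitting the logits of Eq. 4 into per-point terms relies only on the linearity of the inner product. The following Python sketch checks both numerically for a simplified block, assuming a single attention head, scaled dot-product attention, and no LayerNorm or MLP; these simplifications and all toy values are our own and are not the paper's exact transformer.

```python
import math


def matvec(A, x):
    """Matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_block(F, Wq, Wk, Wv, W, b):
    """Simplified Eq. (3): attention with residual, then a residual Linear layer.

    Returns the outputs f^_m, the value vectors v_n, and the attention weights w_mn.
    """
    d = len(F[0])
    Q = [matvec(Wq, f) for f in F]
    K = [matvec(Wk, f) for f in F]
    V = [matvec(Wv, f) for f in F]
    outputs, weights = [], []
    for m, q in enumerate(Q):
        scores = [dot(q, k) / math.sqrt(d) for k in K]
        mx = max(scores)
        exps = [math.exp(s - mx) for s in scores]
        w = [e / sum(exps) for e in exps]
        f_tilde = [F[m][i] + sum(w[n] * V[n][i] for n in range(len(F))) for i in range(d)]
        lin = matvec(W, f_tilde)
        outputs.append([f_tilde[i] + lin[i] + b[i] for i in range(d)])
        weights.append(w)
    return outputs, V, weights


# Toy inputs (d = 2, three points, three classes); all values are illustrative.
F = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
Wq = [[1.0, 0.2], [0.0, 1.0]]
Wk = [[0.5, 0.0], [0.1, 1.0]]
Wv = [[1.0, -0.3], [0.2, 0.8]]
W = [[0.1, 0.2], [-0.1, 0.3]]
b = [0.05, -0.02]
T = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]

out, V, w = attention_block(F, Wq, Wk, Wv, W, b)
W_plus_I = [[W[i][j] + (1.0 if i == j else 0.0) for j in range(2)] for i in range(2)]
f_prime = [matvec(W_plus_I, f) for f in F]  # f'_m = (W + I) f_m
v_prime = [matvec(W_plus_I, v) for v in V]  # v'_n = (W + I) v_n

# Right-hand side of Eq. (3): f'_m + sum_n w_mn v'_n + b.
eq3_err = max(
    abs(out[m][i] - (f_prime[m][i] + sum(w[m][n] * v_prime[n][i] for n in range(3)) + b[i]))
    for m in range(3) for i in range(2)
)

# Logits of Eq. (4) (bias omitted) equal h_mc + sum_n w_mn e_nc by linearity of psi.
logit_err = max(
    abs(dot([f_prime[m][i] + sum(w[m][n] * v_prime[n][i] for n in range(3)) for i in range(2)], T[c])
        - (dot(f_prime[m], T[c]) + sum(w[m][n] * dot(v_prime[n], T[c]) for n in range(3))))
    for m in range(3) for c in range(3)
)
```

Both errors are at floating-point round-off level, which is what allows the gradient analysis to treat $h_{m,c}$, $e_{n,c}$, and $w_{mn}$ as separate variables.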

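Denote $h_{m,c} = \psi(f'_m, \hat{T}_c)$ and $e_{n,c} = \psi(v'_n, \hat{T}_c)$. Since $\psi$ is the inner product, $\psi(f'_m + \sum_n w_{mn} v'_n, \hat{T}_c) = h_{m,c} + \sum_n w_{mn} e_{n,c}$, and we define $y_{m,c} = \frac{\exp(h_{m,c} + \sum_n w_{mn} e_{n,c})}{\sum_{c'} \exp(h_{m,c'} + \sum_n w_{mn} e_{n,c'})}$. The gradient of the $m$-th term of the loss with respect to $h_{m,c}$ has the same form as Eq. 2, with $l_m$ replaced by $\hat{l}_m$. The gradient with respect to $e_{n,c}$ can be formulated as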

$$\frac{\partial \hat{\mathcal{L}}_m}{\partial e_{n,c}} = \begin{cases} w_{mn}\,(y_{m,c} - 1), & c = \hat{l}_m; \\ w_{mn}\, y_{m,c}, & c \neq \hat{l}_m. \end{cases} \tag{5}$$

The gradient with respect to $w_{mn}$ can be formulated as

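$$\frac{\partial \hat{\mathcal{L}}_m}{\partial w_{mn}} = \sum_c g_{mn,c}, \qquad g_{mn,c} = \begin{cases} e_{n,c}\,(y_{m,c} - 1), & c = \hat{l}_m; \\ e_{n,c}\, y_{m,c}, & c \neq \hat{l}_m. \end{cases} \tag{6}$$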

During optimization, if $w_{mn} > 0$, the gradient in Eq. 5 forces $e_{n,\hat{l}_m}$ to increase and $e_{n,c}$ ($c \neq \hat{l}_m$) to decrease, meaning that points with higher correlations are pushed toward the same text embedding; the strength of this constraint is determined by $w_{mn}$. The gradient in Eq. 6 forces $w_{mn}$ to increase if $e_{n,\hat{l}_m} > 0$ and to decrease if $e_{n,c} > 0$ ($c \neq \hat{l}_m$). This encourages point features that surround the same text embedding to move closer to each other, and point features that surround different text embeddings to move apart.
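
Eqs. 5 and 6 can be verified numerically. The sketch below treats one point $m$ with two neighbors, writes the logits as $h_{m,c} + \sum_n w_{mn} e_{n,c}$, and compares the closed-form gradients with central finite differences; all values are illustrative assumptions. Because $w_{mn}$ enters every class logit, the code evaluates its gradient as the sum of the per-class terms over $c$.

```python
import math


def softmax(z):
    mx = max(z)
    exps = [math.exp(v - mx) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logits(h, e, w):
    """z_c = h_mc + sum_n w_mn e_nc (inner-product psi, bias omitted as in Eq. (4))."""
    return [h[c] + sum(w[n] * e[n][c] for n in range(len(w))) for c in range(len(h))]


def loss(h, e, w, label):
    """m-th term of Eq. (4)."""
    return -math.log(softmax(logits(h, e, w))[label])


def analytic_grads(h, e, w, label):
    y = softmax(logits(h, e, w))
    r = [y[c] - (1.0 if c == label else 0.0) for c in range(len(h))]  # factor from Eq. (2)
    de = [[w[n] * r[c] for c in range(len(h))] for n in range(len(w))]  # Eq. (5)
    dw = [sum(r[c] * e[n][c] for c in range(len(h))) for n in range(len(w))]  # Eq. (6)
    return de, dw


def fd(fn, eps=1e-6):
    """Central difference of a scalar function of one perturbation variable."""
    return (fn(eps) - fn(-eps)) / (2 * eps)


h = [0.3, 0.1, -0.2]                      # h_mc for one point m
e = [[0.0, 1.5, -0.5], [0.8, -0.2, 0.1]]  # e_nc for two neighbours n
w = [0.6, 0.4]                            # attention weights w_mn
label = 1                                 # refined pseudo-label
de, dw = analytic_grads(h, e, w, label)


def perturb_e(n, c, delta):
    e2 = [list(row) for row in e]
    e2[n][c] += delta
    return loss(h, e2, w, label)


def perturb_w(n, delta):
    w2 = list(w)
    w2[n] += delta
    return loss(h, e, w2, label)


err_e = max(abs(de[n][c] - fd(lambda d: perturb_e(n, c, d))) for n in range(2) for c in range(3))
err_w = max(abs(dw[n] - fd(lambda d: perturb_w(n, d))) for n in range(2))
```

Neighbor 0 has $e_{0,\hat{l}_m} > 0$ and non-positive scores for the other classes, so `dw[0]` is negative and a gradient-descent step increases $w_{m0}$, matching the discussion of Eq. 6.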

The loss function in Eq. 1 pulls each point feature towards its corresponding text embedding based on pseudo-labels. This can be misleading when pseudo-labels are inconsistent, resulting in a scattered feature distribution. Through our intra-scene correlation learning, the loss function in Eq. 4 further imposes two constraints: (a) points with stronger correlations should be close to the same text embedding, and (b) point features within the same category should be closer together. Together, these constraints help construct a more focused and concise feature space.

Inter-scene correlation learning. To address inconsistent pseudo-labels across scenes, we propose an inter-scene attention mechanism that learns feature correlations among objects in different scenes. Specifically, we first gather the sampled point features from all scenes in a training batch, $\{f_m\}_{p_m \in \cup_i P_i^S}$, and then process them with a transformer block:

$$\{\hat{f}_m\}_{p_m \in \cup_i P_i^S} = \mathrm{Transformer}\big(\{f_m\}_{p_m \in \cup_i P_i^S}\big), \tag{7}$$

where $\mathrm{Transformer}(\cdot)$ is the same block as in Eq. 3. Because the attention weights $\{w_{mn}\}$ now also connect points $p_m \in P_i^S$ and $p_n \in P_j^S$ from different scenes ($i \neq j$), the gradients in Eqs. 5 and 6 promote the compactness of visual features across scenes during training, leading to a stable and consistent feature distribution. Visualizations of intra- and inter-scene attention weights are provided in Section 4 of the supplementary material.
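
The intra-scene and inter-scene modules differ in which points may attend to each other. The Python sketch below illustrates this with plain softmax dot-product weights (no learned projections, a simplification of our own): a block-diagonal mask over scene indices emulates per-scene processing, while removing the mask lets $w_{mn}$ connect points from different scenes. The toy features are hypothetical.

```python
import math


def attention_weights(F, allowed=None):
    """Softmax dot-product weights w_mn; allowed(m, n) = False masks a pair out."""
    d = len(F[0])
    weights = []
    for m, fm in enumerate(F):
        scores = []
        for n, fn in enumerate(F):
            if allowed is not None and not allowed(m, n):
                scores.append(float("-inf"))  # masked pairs get zero weight
            else:
                scores.append(sum(a * b for a, b in zip(fm, fn)) / math.sqrt(d))
        mx = max(scores)  # finite, since a point may always attend to itself
        exps = [math.exp(s - mx) for s in scores]
        total = sum(exps)
        weights.append([x / total for x in exps])
    return weights


def cross_scene_mass(weights, scene_id):
    """Total attention each point places on points from other scenes."""
    return [
        sum(w for n, w in enumerate(row) if scene_id[n] != scene_id[m])
        for m, row in enumerate(weights)
    ]


# Two toy scenes with two points each; points 0 and 2 are similar (e.g. two chairs).
scene_a = [[1.0, 0.0], [0.9, 0.1]]
scene_b = [[0.95, 0.05], [0.0, 1.0]]
F = scene_a + scene_b
scene_id = [0, 0, 1, 1]

intra = attention_weights(F, allowed=lambda m, n: scene_id[m] == scene_id[n])
inter = attention_weights(F)
intra_mass = cross_scene_mass(intra, scene_id)
inter_mass = cross_scene_mass(inter, scene_id)
```

Under the mask, no attention crosses scene boundaries; without it, point 0 attends more to its similar counterpart in the other scene (point 2) than to the dissimilar point 3, which is the coupling through which Eqs. 5 and 6 act across scenes.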

Our theoretical analysis suggests that integrating correlations within the optimization process can effectively impose further constraints on feature compactness among points of high relevance, leading to a more compact feature space with less ambiguity. This enables the 3D model to learn robust features under noisy pseudo-labels.

Figure 3. Visualization of intra-set label refinement. By replacing the original pseudo-labels with the most frequent labels in each geometric consistency set, we can effectively reduce the noise.

3.3. Feedback Distillation

To distill the correlation learning capability of our hierarchical framework into the 3D model, we align the output features of the 3D model with the final aggregated point features through the widely used Kullback-Leibler (KL) divergence loss, formulated as

$$\mathcal{L}_{kl} = \sum_{m=1}^{M} \mathrm{KL}(f_m, \hat{f}_m), \tag{8}$$

where $\hat{f}_m$ is the $m$-th aggregated feature and $\mathrm{KL}(\cdot, \cdot)$ is the KL divergence, which measures the difference between the distributions represented by the two input vectors. This feedback strategy enables the 3D model to absorb the ability to capture visual correlations, thereby producing more robust semantic predictions.
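
The text does not specify how the feature vectors are turned into distributions for the KL divergence. The sketch below assumes a softmax over feature channels with a hypothetical temperature `tau`, and treats the aggregated feature $\hat{f}_m$ as the target, i.e., it computes $\mathrm{KL}(\mathrm{softmax}(\hat{f}_m) \,\|\, \mathrm{softmax}(f_m))$; both the normalization and the argument order are our assumptions.

```python
import math


def log_softmax(x, tau=1.0):
    """Log of softmax(x / tau), computed stably via log-sum-exp."""
    z = [v / tau for v in x]
    mx = max(z)
    lse = mx + math.log(sum(math.exp(v - mx) for v in z))
    return [v - lse for v in z]


def kl_feedback_loss(student_feats, teacher_feats, tau=1.0):
    """Sum over points of KL(p_teacher || p_student): Eq. (8) under our assumptions."""
    total = 0.0
    for f, f_hat in zip(student_feats, teacher_feats):
        log_p = log_softmax(f_hat, tau)  # target from the aggregated feature f^_m
        log_q = log_softmax(f, tau)      # prediction from the 3D model feature f_m
        total += sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return total


same = kl_feedback_loss([[0.3, -0.2, 1.0]], [[0.3, -0.2, 1.0]])
diff = kl_feedback_loss([[1.0, 0.0, -1.0]], [[0.0, 1.0, 0.0]])
```

In PyTorch, the same quantity (for `tau = 1`) could be computed with `torch.nn.functional.kl_div(log_q, log_p, reduction="sum", log_target=True)`, where `log_q` and `log_p` are `torch.nn.functional.log_softmax` outputs for $f_m$ and $\hat{f}_m$; whether $\hat{f}_m$ should be detached from the computation graph is likewise not stated in the text.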

3.4. Overall Objective

Training proceeds in three stages: during the first 10 epochs, the 3D model is trained with $\mathcal{L}_{ce}$; starting at epoch 11, we additionally train the vision transformers by introducing $\hat{\mathcal{L}}_{ce}$; and after epoch 20, we add $\mathcal{L}_{kl}$ for feedback distillation. In each stage, the training objective is the average of the active losses, and training runs continuously across stages without restarts. During inference, we retain only the 3D model for semantic segmentation.
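
The staged objective can be summarized by a small schedule function. This sketch assumes 1-indexed epochs and reads the third stage as starting at epoch 21; the string names are placeholders for $\mathcal{L}_{ce}$, $\hat{\mathcal{L}}_{ce}$, and $\mathcal{L}_{kl}$, and the functions are hypothetical helpers rather than the authors' training code.

```python
def active_losses(epoch):
    """Losses used at a 1-indexed epoch, following the three-stage schedule."""
    losses = ["L_ce"]              # stage 1: epochs 1-10
    if epoch >= 11:
        losses.append("L_ce_hat")  # stage 2: add the correlation-learning loss
    if epoch >= 21:
        losses.append("L_kl")      # stage 3: add feedback distillation
    return losses


def total_loss(epoch, values):
    """Average of the active losses; `values` maps loss names to scalars."""
    active = active_losses(epoch)
    return sum(values[name] for name in active) / len(active)


values = {"L_ce": 1.0, "L_ce_hat": 2.0, "L_kl": 3.0}
```

Averaging rather than summing keeps the overall loss scale comparable when a new term is switched on.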

4. Experiment

4.1. Experiment Setup

Datasets and metrics. We conduct experiments on the indoor ScanNet dataset [7] and the outdoor nuScenes dataset [2]. ScanNet contains 1,613 scanned indoor scenes, of which 1,201 are used for training, 312 for validation, and 100 for testing. nuScenes contains 1,000 scenes, of which 700 are used for training, 150 for validation, and 150 for testing. We use mean Intersection over Union (mIoU) to evaluate semantic segmentation performance, training GPU hours to measure training cost, and the number of model parameters to measure model size.
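
For reference, mIoU averages the per-class ratio $\mathrm{TP} / (\mathrm{TP} + \mathrm{FP} + \mathrm{FN})$ over classes. The sketch below is a generic implementation, not the evaluation code used for the reported numbers; skipping classes that occur in neither the prediction nor the ground truth, and the optional `ignore_index`, are common conventions that we assume here.

```python
def mean_iou(pred, gt, num_classes, ignore_index=None):
    """mIoU over classes that appear in the prediction or the ground truth."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, g in zip(pred, gt):
        if ignore_index is not None and g == ignore_index:
            continue  # unlabeled points do not count
        if p == g:
            inter[p] += 1  # true positive
            union[p] += 1
        else:
            union[p] += 1  # false positive for the predicted class
            union[g] += 1  # false negative for the ground-truth class
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious)


score = mean_iou([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], num_classes=3)
```

In this toy example, the per-class IoUs are 1/2, 2/3, and 1, so `score` is their mean, about 0.722.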

Table 1. Comparison with previous SOTA label-free 3D semantic segmentation methods on ScanNet and nuScenes datasets. The training time is measured on the ScanNet dataset. † indicates concurrent work.

Implementation details. Following CLIP2Scene [5], we use MinkowskiNet14 [6] and SPVCNN [24] as the 3D models for ScanNet and nuScenes, respectively. Image features and text embeddings are generated by MaskCLIP [39], which is kept fixed during training. To extract intra-scene and inter-scene correlations, we use two three-layer vision transformers [9]: one for intra-scene correlation learning and the other for inter-scene correlation learning. We optimize the 3D model with SGD and the vision transformers with AdamW, using a cosine learning rate decay schedule with initial learning rates of 0.2 and 2e-4, respectively. Our framework is implemented in PyTorch and trained on four NVIDIA Tesla V100 GPUs. On ScanNet, we train for 120 epochs (3.5 hours) with a batch size of 8; on nuScenes, we train for 30 epochs (12.5 hours) with a batch size of 8.

4.2. Comparison Methods

We compare our method with state-of-the-art methods, including MaskCLIP [39], MaskCLIP+ [39], OpenScene [21], CLIP2Scene [5], and Chen et al. [4], on the ScanNet and nuScenes datasets.

MaskCLIP & MaskCLIP+ [39] generate 3D segmentation results by projecting 2D pixel-wise predictions onto 3D points using camera parameters and depth maps. The 2D predictions are obtained from the similarities between CLIP visual features and text embeddings.
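This projection-based approach has two steps: labeling pixels by text-image similarity, then transferring those labels to 3D points through the camera geometry. The NumPy sketch below illustrates both steps. It is a simplified example, not MaskCLIP's code, and it makes several assumptions:

- a pinhole camera with intrinsics `K`;
- a 4x4 world-to-camera matrix;
- a depth map aligned with the image;
- a hypothetical depth tolerance `depth_tol` for the occlusion check.

Points that fall behind the camera, outside the image, or are occluded receive the label -1.

```python
import numpy as np

def pixel_labels(pixel_feats, text_embs):
    """Per-pixel class = argmax cosine similarity with class text embeddings.

    pixel_feats: (H, W, D) dense visual features; text_embs: (C, D).
    """
    f = pixel_feats / np.linalg.norm(pixel_feats, axis=-1, keepdims=True)
    t = text_embs / np.linalg.norm(text_embs, axis=-1, keepdims=True)
    return (f @ t.T).argmax(axis=-1)                      # (H, W) class ids

def project_labels(points, K, world_to_cam, depth, labels2d, depth_tol=0.05):
    """Give each 3D point the 2D label of the pixel it projects to (-1 if none)."""
    n = points.shape[0]
    out = np.full(n, -1, dtype=int)
    homo = np.hstack([points, np.ones((n, 1))])           # (N, 4) homogeneous
    cam = (world_to_cam @ homo.T).T[:, :3]                # camera coordinates
    z = cam[:, 2]
    in_front = z > 1e-6
    uvw = (K @ cam.T).T                                   # pinhole projection
    u = np.zeros(n, dtype=int)
    v = np.zeros(n, dtype=int)
    u[in_front] = np.round(uvw[in_front, 0] / z[in_front]).astype(int)
    v[in_front] = np.round(uvw[in_front, 1] / z[in_front]).astype(int)
    h, w = depth.shape
    idx = np.where(in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h))[0]
    # Occlusion test: the point must lie on the surface seen in the depth map.
    idx = idx[np.abs(depth[v[idx], u[idx]] - z[idx]) < depth_tol]
    out[idx] = labels2d[v[idx], u[idx]]
    return out
```

Chaining the two steps gives per-point pseudo-labels: `project_labels(points, K, T, depth, pixel_labels(feats, text_embs))`.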

OpenScene [21] combines multi-view image features with point features and predicts labels from their similarity with CLIP text embeddings. For a fair comparison, the reported results use the same image encoder as MaskCLIP.
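Multi-view fusion can be illustrated by average-pooling each point's 2D features over the views in which the point is visible. The sketch below assumes that per-view features sampled at each point's projection and the matching visibility masks are already available. It uses plain averaging and omits the rest of OpenScene's pipeline, so it should be read as an illustration of the idea, not as OpenScene's implementation.

```python
import numpy as np

def fuse_multiview(view_feats, visible):
    """Average-pool each point's 2D features over the views where it is visible.

    view_feats: (V, N, D) per-view features sampled at each point's projection.
    visible:    (V, N) boolean visibility masks.
    Returns (N, D); points visible in no view get all-zero features.
    """
    w = visible[..., None].astype(float)                  # (V, N, 1) weights
    summed = (view_feats * w).sum(axis=0)                 # (N, D)
    counts = w.sum(axis=0)                                # (N, 1) views per point
    return summed / np.maximum(counts, 1.0)
```

The fused features can then be compared with the class text embeddings by cosine similarity, as in the 2D case.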

CLIP2Scene [5] achieves 3D segmentation with two regularizers: semantic consistency regularization, which aligns point features with the corresponding text embeddings, and spatial-temporal consistency regularization, which concentrates point features within each spatial-temporal interval.
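Aligning point features with text embeddings is commonly written as a cross-entropy over temperature-scaled cosine similarities between each point and every class embedding, supervised by pseudo-labels. The sketch below shows that generic form. It assumes a hypothetical temperature `tau` and an `ignore_index` for unlabeled points. It is not CLIP2Scene's exact semantic consistency loss, and it does not cover the spatial-temporal term.

```python
import numpy as np

def point_text_alignment_loss(point_feats, text_embs, pseudo_labels,
                              tau=0.07, ignore_index=-1):
    """Cross-entropy over temperature-scaled point-to-text cosine similarities."""
    keep = pseudo_labels != ignore_index
    if not keep.any():
        return 0.0                                        # no supervised points
    p = point_feats[keep]
    y = pseudo_labels[keep]
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    t = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = (p @ t.T) / tau                              # (N, C)
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(y)), y].mean())
```

Points whose features already point toward their pseudo-label's text embedding contribute almost nothing to this loss. Misaligned points dominate it.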

Chen et al. [4] use segmentation masks from SAM [16] to refine the noisy pseudo-labels produced by MaskCLIP, thereby improving the 3D model's performance.

Our approach hierarchically exploits intra-modal correlations to make point features more compact, thereby reducing prediction ambiguity. Our principal contribution, intra-modal feature alignment, is compatible with the concurrent work of Chen et al. [4], which relies on cross-modal feature alignment to learn robust point features from refined pseudo-labels. Moreover, we achieve state-of-the-art results without additional priors such as the feature fusion strategy of OpenScene or the SAM model used by Chen et al.

4.3. Label-free 3D Semantic Segmentation

We demonstrate the effectiveness of our hierarchical intra-modal correlation learning framework on the indoor ScanNet dataset and the outdoor nuScenes dataset. In Table 1, we report mIoU on ScanNet and nuScenes, together with the training time and model size of the models trained on ScanNet. On ScanNet, our method outperforms all state-of-the-art methods with 36.6% mIoU. This is a significant gain of 11.0% mIoU over CLIP2Scene [5] and of 3.1% mIoU over Chen et al. [4]. Furthermore, our method's training cost and model size are notably small. On nuScenes, we achieve 23.0% mIoU, a gain of 2.2% mIoU over CLIP2Scene. These results show the superiority of our hierarchical intra-modal correlation learning framework. Fig. 4 presents qualitative results of our method and CLIP2Scene on the ScanNet validation set. Because Chen et al. [4] have not yet released their code, their visualizations are not included. Compared with CLIP2Scene, our method produces more consistent predictions, for example on the wall in the first row, the table in the second row, and the sofa in the last row. Our method also recognizes categories with limited training samples more accurately, such as the sink in the first row and the cabinet in the second row. With our hierarchical intra-modal correlation learning framework, the 3D model generates more coherent and accurate semantic segmentation results.

Figure 4. Qualitative comparison for semantic segmentation of our method and CLIP2Scene [5] on the ScanNet dataset. More visualization results are reported in the supplementary material.

Figure 5. Visualization of pseudo-labels on nuScenes dataset.

We also observe that our improvement on nuScenes is smaller than that on ScanNet. This is because the pseudo-labels generated by CLIP [22, 39] on nuScenes are of notably poor quality. As shown in Fig. 5, intra-set refinement improves local consistency, but the overall quality of CLIP labels is so poor that erroneous labels constitute the majority. Consequently, the refined labels may not reflect the true categories of the points. Meanwhile, Chen et al. [4] add an extra step that trains a 2D semantic segmentation model. Although costly, this step effectively corrects these erroneous labels, contributing to a larger improvement.

4.4. Ablation Study

To evaluate the effectiveness of the different components of our hierarchical intra-modal correlation learning framework, we conduct a series of ablation experiments on ScanNet, with results shown in Table 2. All experiments are run on four NVIDIA V100 GPUs with a batch size of 4 for 120 training epochs. In EXP I, we reproduce CLIP2Scene [5] as our baseline, which achieves 28.8% mIoU on the ScanNet validation set.

Table 2. Ablation study of our hierarchical intra-modal correlation learning framework on the ScanNet validation set. ‘FB’ denotes the feedback distillation mechanism.

Ablation of intra-set label refinement. In EXP II, we cluster the input point cloud into geometrically consistent sets and refine the labels within each set. This reaches 31.1% mIoU, a gain of 2.3% mIoU over EXP I. As shown in Fig. 3, intra-set label refinement produces cleaner pseudo-labels, which improves the coherence of the 3D model's predictions.
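One simple way to realize per-set refinement is majority voting: every point in a geometric consistency set takes the most frequent pseudo-label in that set. The sketch below assumes this voting rule. It also assumes that the set indices come from some over-segmentation, for example graph-based [11] or supervoxel [20] clustering. The exact refinement rule used in EXP II may differ.

```python
import numpy as np

def refine_labels_by_set(pseudo_labels, set_ids, num_classes, ignore_index=-1):
    """Replace each point's pseudo-label with the majority label of its set."""
    refined = pseudo_labels.copy()
    for s in np.unique(set_ids):
        members = set_ids == s
        votes = pseudo_labels[members]
        votes = votes[votes != ignore_index]
        if votes.size == 0:
            continue                          # nothing to vote with; keep as-is
        counts = np.bincount(votes, minlength=num_classes)
        refined[members] = counts.argmax()    # ties go to the lowest class id
    return refined
```

This rule also illustrates the nuScenes failure case discussed above. When wrong labels form the majority inside a set, voting spreads them to the whole set instead of removing them.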

Ablation of intra-scene correlation learning. In EXP III, we add the intra-scene correlation learning module to EXP II, raising the gain over EXP I to 3.3% mIoU. As shown in the second column of Fig. 7, intra-scene correlation learning enables the model to learn a more concentrated and concise feature space, reducing confusion between categories.
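A transformer can exploit point correlations because each output feature of a self-attention layer is a similarity-weighted average of all tokens. Similar points therefore pull toward each other. The sketch below applies one single-head scaled dot-product self-attention layer to point tokens. It is a toy stand-in for the three-layer vision transformers in the implementation details. It uses random, hypothetical projection weights and has no MLP, normalization, or positional encoding. Passing the tokens of one scene corresponds to the intra-scene setting. Concatenating tokens from several scenes before attention is one way to let points attend across scenes, in the spirit of the inter-scene module.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)               # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """One single-head scaled dot-product self-attention layer with a residual.

    tokens: (N, D) point features. Returns the updated tokens and the (N, N)
    attention matrix, whose rows are point-to-point correlation weights.
    """
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=1)
    return tokens + attn @ v, attn

# Hypothetical example: random weights and features, D = 16.
rng = np.random.default_rng(0)
d = 16
w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
scene_a = rng.standard_normal((100, d))                   # points of one scene
scene_b = rng.standard_normal((80, d))                    # points of another scene
out_intra, _ = self_attention(scene_a, w_q, w_k, w_v)     # within one scene
out_inter, attn = self_attention(np.concatenate([scene_a, scene_b]), w_q, w_k, w_v)
```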

Figure 6. Semantic segmentation results of our method and CLIP2Scene [5] on ScanNet across different training epochs.

Ablation of inter-scene correlation learning. Building on EXP III, EXP IV additionally employs the inter-scene correlation learning module, raising the gain over EXP I to 7.3% mIoU. As shown in the third row of Fig. 7, this strategy further constrains the feature spaces of multiple scenes to be consistent, which helps the model produce stable feature distributions across scenes.
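The quoted ablation gains are easiest to interpret when they are all read as measured against the EXP I baseline. If the 7.3% gain of EXP IV were added to EXP III instead, EXP IV would already exceed the 36.6% that EXP V reaches, yet EXP V is described as a further improvement. The short calculation below derives the implied per-experiment scores and step-by-step increments under that cumulative reading. It is a consistency check on the numbers in the text, not a reproduction of Table 2.

```python
BASELINE = 28.8  # EXP I: reproduced CLIP2Scene, mIoU (%) on ScanNet val

# Gains quoted in the text, read as measured against EXP I (an assumption).
GAINS = [("EXP I", 0.0), ("EXP II", 2.3), ("EXP III", 3.3),
         ("EXP IV", 7.3), ("EXP V", 7.8)]

def implied_scores(baseline, gains):
    """Return (name, implied mIoU, increment over the previous experiment)."""
    rows, prev = [], baseline
    for name, gain in gains:
        miou = round(baseline + gain, 1)
        rows.append((name, miou, round(miou - prev, 1)))
        prev = miou
    return rows

for name, miou, step in implied_scores(BASELINE, GAINS):
    print(f"{name}: {miou:.1f}% mIoU ({step:+.1f} vs. previous)")
```

Under this reading, the implied scores are 28.8, 31.1, 32.1, 36.1, and 36.6% mIoU. The corresponding increments are 2.3 points for intra-set refinement, 1.0 for intra-scene learning, 4.0 for inter-scene learning, and 0.5 for feedback distillation.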

Ablation of feedback distillation. In EXP V, we add the feedback distillation mechanism and achieve 36.6% mIoU, a total gain of 7.8% mIoU over the CLIP2Scene baseline. By aligning the feature spaces, we transfer the correlation learning capability into the 3D model, further enhancing its performance.
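One plausible form of feedback distillation is a feature-alignment loss. It pulls the 3D model's point features toward the correlation-refined features, which act as fixed targets. The sketch below assumes a cosine-distance objective. This section does not specify the loss actually used, which may be an L2 term or another divergence instead.

```python
import numpy as np

def cosine_distillation_loss(student, teacher):
    """Mean (1 - cosine similarity) between student and teacher point features.

    student: (N, D) features from the 3D model.
    teacher: (N, D) correlation-refined features, treated as constant targets
             (in an autodiff framework they would be detached / stop-gradient).
    """
    s = student / np.maximum(np.linalg.norm(student, axis=1, keepdims=True), 1e-8)
    t = teacher / np.maximum(np.linalg.norm(teacher, axis=1, keepdims=True), 1e-8)
    return float(np.mean(1.0 - np.sum(s * t, axis=1)))
```

The loss is 0 when every student feature points in the same direction as its target, 1 when they are orthogonal, and 2 when they are opposite. It ignores feature magnitude.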

Ablation of training epochs. We compare the segmentation performance of our method and CLIP2Scene [5] as the number of training epochs increases from 30 to 120. The results are shown in Fig. 6. Our method consistently outperforms CLIP2Scene across training epochs, with the largest gain, 7.8% mIoU, at the 120th epoch. Our method also shows a stronger upward trend: its mIoU increases by 1.4% from 100 to 120 epochs, compared with only 0.3% for CLIP2Scene over the same range.

Additional ablation studies on geometric consistency sets are detailed in Section 3 of the supplementary material.

4.5. Feature Space Visualization

Our hierarchical intra-modal correlation learning framework aims to create a concise feature space. To validate this, Fig. 7 compares t-SNE visualizations of the feature spaces learned by our method and by CLIP2Scene [5]. The first two rows show t-SNE maps of two distinct scenes. For each scene, we show the feature spaces of CLIP2Scene, of our method with intra-scene correlation learning, and of our method with inter-scene correlation learning. Compared with CLIP2Scene, both the intra- and inter-scene strategies help the 3D model learn a more focused feature space with fewer conflicts, as highlighted by the dotted box. This verifies that introducing point correlations helps concentrate point features. In the last row of Fig. 7, we concatenate the point features of the two scenes to examine whether feature distributions are consistent across scenes. Compared with CLIP2Scene and the intra-scene strategy, our inter-scene setting yields a more stable feature space across scenes. This indicates that incorporating global correlations across multiple scenes improves the alignment of feature distributions in different scenes and helps the 3D model produce more robust predictions.

Figure 7. Feature space visualization. We visualize the learned point features of our method and CLIP2Scene [5] using t-SNE maps. The first two rows are t-SNE maps of two distinct scenes and the last row shows the t-SNE map of the concatenated features from these two scenes. Refrig and S curtain are the abbreviations for refrigerator and shower curtain, respectively.

5. Limitations and Future Work

Our work demonstrates that exploring intra-modal correlations can aid 3D model training for label-free 3D semantic segmentation. However, we have not yet explored intra-modal correlations within images and texts. Exploiting correlations among images could yield a more consistent 2D feature space and hence more accurate and coherent pseudo-labels. Similarly, investigating correlations within texts could enable more precise and detailed descriptions, offering richer guidance for cross-modal alignment. In the future, we will extend our hierarchical intra-modal correlation learning framework to images and texts to further improve label-free 3D semantic segmentation.

6. Conclusion

In this paper, we introduce a hierarchical intra-modal correlation learning framework for label-free 3D semantic segmentation. Our method hierarchically exploits visual and geometric correlations at three levels: intra-set, intra-scene, and inter-scene. These correlations help mitigate noise in pseudo-labels and concentrate point features, resulting in a focused and concise 3D feature space with fewer conflicts. Experiments on both indoor and outdoor datasets demonstrate the superiority of our method, and theoretical analysis and extensive ablation studies further support the effectiveness of our framework.

References

[1] Iro Armeni, Zhi-Yang He, JunYoung Gwak, Amir R Zamir, Martin Fischer, Jitendra Malik, and Silvio Savarese. 3d scene graph: A structure for unified semantics, 3d space, and camera. In Proceedings of the IEEE/CVF international conference on computer vision, pages 5664–5673, 2019. 3

[2] Holger Caesar, Varun Bankiti, Alex H. Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020. 5

[3] Nenglun Chen, Lei Chu, Hao Pan, Yan Lu, and Wenping Wang. Self-supervised image representation learning with geometric set consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19292–19302, 2022. 4

[4] Runnan Chen, Youquan Liu, Lingdong Kong, Nenglun Chen, Xinge Zhu, Yuexin Ma, Tongliang Liu, and Wenping Wang. Towards label-free scene understanding by vision foundation models. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. 1, 2, 3, 6, 7

[5] Runnan Chen, Youquan Liu, Lingdong Kong, Xinge Zhu, Yuexin Ma, Yikang Li, Yuenan Hou, Yu Qiao, and Wenping Wang. CLIP2Scene: Towards label-efficient 3D scene understanding by CLIP. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7020–7030, 2023. 1, 2, 3, 6, 7, 8

[6] Christopher Choy, JunYoung Gwak, and Silvio Savarese. 4d spatio-temporal convnets: Minkowski convolutional neural networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 3075–3084, 2019. 3, 6

[7] Angela Dai, Angel X. Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nießner. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017. 5

[8] Runyu Ding, Jihan Yang, Chuhui Xue, Wenqing Zhang, Song Bai, and Xiaojuan Qi. PLA: Language-driven open-vocabulary 3D scene understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7010–7019, 2023. 1, 2

[9] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020. 3, 6

[10] Nico Engel, Vasileios Belagiannis, and Klaus Dietmayer. Point transformer. IEEE access, 9:134826–134840, 2021. 3

[11] Pedro F Felzenszwalb and Daniel P Huttenlocher. Efficient graph-based image segmentation. International journal of computer vision, 59:167–181, 2004. 4

[12] Ben Graham. Sparse 3d convolutional neural networks. arXiv preprint arXiv:1505.02890, 2015. 3

[13] Benjamin Graham, Martin Engelcke, and Laurens Van Der Maaten. 3d semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 9224–9232, 2018. 3

[14] Benjamin Graham and Laurens van der Maaten. Submanifold sparse convolutional networks. arXiv preprint arXiv:1706.01307, 2017. 3

[15] Zhao Jin, Munawar Hayat, Yuwei Yang, Yulan Guo, and Yinjie Lei. Context-aware alignment and mutual masking for 3D-language pre-training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10984–10994, 2023. 1, 2

[16] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. arXiv preprint arXiv:2304.02643, 2023. 2, 4, 6

[17] Xin Lai, Jianhui Liu, Li Jiang, Liwei Wang, Hengshuang Zhao, Shu Liu, Xiaojuan Qi, and Jiaya Jia. Stratified transformer for 3d point cloud segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8500–8509, 2022. 3

[18] Yueh-Cheng Liu, Yu-Kai Huang, Hung-Yueh Chiang, Hung-Ting Su, Zhe-Yu Liu, Chin-Tang Chen, Ching-Yu Tseng, and Winston H Hsu. Learning from 2D: Contrastive pixel-to-point knowledge transfer for 3D pretraining. arXiv preprint arXiv:2104.04687, 2021. 2

[19] Yuheng Lu, Chenfeng Xu, Xiaobao Wei, Xiaodong Xie, Masayoshi Tomizuka, Kurt Keutzer, and Shanghang Zhang. Open-vocabulary point-cloud object detection without 3D annotation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1190–1199, 2023. 1, 2

[20] Jeremie Papon, Alexey Abramov, Markus Schoeler, and Florentin Wörgötter. Voxel cloud connectivity segmentation - supervoxels for point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2027–2034, 2013. 4

[21] Songyou Peng, Kyle Genova, Chiyu Jiang, Andrea Tagliasacchi, Marc Pollefeys, Thomas Funkhouser, et al. OpenScene: 3D scene understanding with open vocabularies. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 815–824, 2023. 1, 2, 6

[22] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021. 1, 2, 7

[23] Corentin Sautier, Gilles Puy, Spyros Gidaris, Alexandre Boulch, Andrei Bursuc, and Renaud Marlet. Image-to-lidar self-supervised distillation for autonomous driving data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9891–9901, 2022. 2

[24] Haotian Tang, Zhijian Liu, Shengyu Zhao, Yujun Lin, Ji Lin, Hanrui Wang, and Song Han. Searching efficient 3d architectures with sparse point-voxel convolution. In European conference on computer vision, pages 685–702. Springer, 2020. 6

[25] Johanna Wald, Helisa Dhamo, Nassir Navab, and Federico Tombari. Learning 3d semantic scene graphs from 3d indoor reconstructions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3961–3970, 2020. 3

[26] Peng-Shuai Wang. Octformer: Octree-based transformers for 3d point clouds. arXiv preprint arXiv:2305.03045, 2023. 3

[27] Peng-Shuai Wang, Yang Liu, Yu-Xiao Guo, Chun-Yu Sun, and Xin Tong. O-cnn: Octree-based convolutional neural networks for 3d shape analysis. ACM Transactions On Graphics (TOG), 36(4):1–11, 2017. 3

[28] Ziqin Wang, Bowen Cheng, Lichen Zhao, Dong Xu, Yang Tang, and Lu Sheng. VL-SAT: Visual-linguistic semantics assisted training for 3D semantic scene graph prediction in point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 21560–21569, 2023. 3

[29] Xiaoyang Wu, Yixing Lao, Li Jiang, Xihui Liu, and Hengshuang Zhao. Point transformer v2: Grouped vector attention and partition-based pooling. Advances in Neural Information Processing Systems, 35:33330–33342, 2022. 3

[30] Danfei Xu, Yuke Zhu, Christopher B Choy, and Li Fei-Fei. Scene graph generation by iterative message passing. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5410–5419, 2017. 3

[31] Le Xue, Mingfei Gao, Chen Xing, Roberto Martín-Martín, Jiajun Wu, Caiming Xiong, Ran Xu, Juan Carlos Niebles, and Silvio Savarese. ULIP: Learning a unified representation of language, images, and point clouds for 3D understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1179–1189, 2023. 1, 2

[32] Xu Yan, Jiantao Gao, Chaoda Zheng, Chao Zheng, Ruimao Zhang, Shuguang Cui, and Zhen Li. 2dpass: 2d priors assisted semantic segmentation on lidar point clouds. In European Conference on Computer Vision, pages 677–695. Springer, 2022. 2

[33] Jingkang Yang, Yi Zhe Ang, Zujin Guo, Kaiyang Zhou, Wayne Zhang, and Ziwei Liu. Panoptic scene graph generation. In European Conference on Computer Vision, pages 178–196. Springer, 2022. 3

[34] Jianwei Yang, Jiasen Lu, Stefan Lee, Dhruv Batra, and Devi Parikh. Graph r-cnn for scene graph generation. In Proceedings of the European conference on computer vision (ECCV), pages 670–685, 2018. 3

[35] Yu-Qi Yang, Yu-Xiao Guo, Jian-Yu Xiong, Yang Liu, Hao Pan, Peng-Shuai Wang, Xin Tong, and Baining Guo. Swin3d: A pretrained transformer backbone for 3d indoor scene understanding. arXiv preprint arXiv:2304.06906, 2023. 3

[36] Junbo Yin, Dingfu Zhou, Liangjun Zhang, Jin Fang, ChengZhong Xu, Jianbing Shen, and Wenguan Wang. Proposalcontrast: Unsupervised pre-training for lidar-based 3d object detection. In European Conference on Computer Vision, pages 17–33. Springer, 2022. 1, 2

[37] Renrui Zhang, Ziyu Guo, Wei Zhang, Kunchang Li, Xupeng Miao, Bin Cui, Yu Qiao, Peng Gao, and Hongsheng Li. Pointclip: Point cloud understanding by clip. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8552–8562, 2022. 1, 2

[38] Hengshuang Zhao, Li Jiang, Jiaya Jia, Philip HS Torr, and Vladlen Koltun. Point transformer. In Proceedings of the IEEE/CVF international conference on computer vision, pages 16259–16268, 2021. 3

[39] Chong Zhou, Chen Change Loy, and Bo Dai. Extract free dense labels from clip. In European Conference on Computer Vision, pages 696–712. Springer, 2022. 1, 2, 3, 6, 7

[40] Xiangyang Zhu, Renrui Zhang, Bowei He, Ziyao Zeng, Shanghang Zhang, and Peng Gao. Pointclip v2: Adapting clip for powerful 3d open-world learning. arXiv preprint arXiv:2211.11682, 2022. 1, 2
