1. Check whether the torch version is incompatible with SGLang:

-> Packages in the Python environment (e.g. torch, deepspeed, transformers) were updated, which made them incompatible with each other.
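Because the breakage comes from packages silently moving to new versions, it helps to record the versions that work and compare against them after every `pip install`. The `cannot import name 'log' from 'torch.distributed.elastic.agent.server.api'` lines in the logs below are the kind of symptom such drift produces. Below is a minimal sketch using only the standard library. The script name `check_env.py`, the helper names and the package list are hypothetical choices for illustration, not part of SGLang.

```python
"""Snapshot and compare versions of packages that SGLang imports.

A minimal sketch (hypothetical helper, not part of SGLang): save a snapshot
while the server still starts, then compare against it after any pip install.
"""
import json
import sys
from importlib.metadata import PackageNotFoundError, version

# Packages named in this post; extend as needed.
PACKAGES = ("sglang", "torch", "transformers", "deepspeed")


def installed_version(name):
    """Return the installed version of `name`, or None if it is not installed."""
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def snapshot(packages=PACKAGES, lookup=installed_version):
    """Map each package name to its currently installed version (or None)."""
    return {pkg: lookup(pkg) for pkg in packages}


def find_mismatches(expected, lookup=installed_version):
    """Return {package: (expected, installed)} for every version that changed."""
    mismatches = {}
    for pkg, wanted in expected.items():
        have = lookup(pkg)
        if have != wanted:
            mismatches[pkg] = (wanted, have)
    return mismatches


def main(argv):
    if len(argv) != 3 or argv[1] not in ("save", "check"):
        print("usage: check_env.py save|check SNAPSHOT.json")
        return 2
    mode, path = argv[1], argv[2]
    if mode == "save":
        # Record the versions of a known-good environment.
        with open(path, "w", encoding="utf-8") as f:
            json.dump(snapshot(), f, indent=2)
        return 0
    with open(path, encoding="utf-8") as f:
        expected = json.load(f)
    diffs = find_mismatches(expected)
    for pkg, (wanted, have) in diffs.items():
        print(f"{pkg}: snapshot {wanted}, installed {have}")
    return 1 if diffs else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

Usage: run `python check_env.py save env.json` while the server still starts correctly, and `python check_env.py check env.json` after installing anything else; a non-zero exit code means some version moved.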

Reference: Exception: Capture CUDA graph failed: CUDA error: out of memory (CSDN blog)

# Although a minimum sglang version is specified, its dependencies (such as PyTorch and transformers) are not pinned

pip install "sglang[all]>=0.4.6.post5"

2. Server hangs after adding external network parameters

avail_mem=0.00 GB
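The 0.00 GB figure can be partly traced with the numbers in the failing log further down: after loading the weights, 3.41 GB remain, and the next line reports `KV Cache is allocated. #tokens: 96589, K size: 1.29 GB, V size: 1.29 GB`. The sketch below reproduces the K size from the model shape. It assumes 28 layers, 2 KV heads, head_dim 128 and bf16 (2 bytes per value); head_dim 128 and bf16 appear in the log, while the layer and KV-head counts are my reading of the model's config.json, so check them against your local copy.

```python
"""Reproduce the 'K size' reported in the SGLang log from the model shape.

Assumed shape for DeepSeek-R1-Distill-Qwen-1.5B (verify in config.json):
28 layers, 2 KV heads, head_dim 128, bf16 = 2 bytes per value.
"""

GIB = 1024 ** 3  # the logged sizes match binary gigabytes


def kv_tensor_bytes(num_tokens, num_layers, num_kv_heads, head_dim, dtype_bytes):
    """Bytes for ONE of the two caches (K or V) across all layers."""
    return num_tokens * num_layers * num_kv_heads * head_dim * dtype_bytes


if __name__ == "__main__":
    k_bytes = kv_tensor_bytes(96589, 28, 2, 128, 2)
    print(f"K size: {k_bytes / GIB:.2f} GB")      # prints 1.29, as in the log
    print(f"K + V : {2 * k_bytes / GIB:.2f} GB")  # prints 2.58
```

So K and V together take about 2.58 GB of the 3.41 GB left after the weights, and the log then reports avail mem=0.00 GB, meaning CUDA graph capture starts with no headroom at all. I have not verified exactly how SGLang accounts for the remaining memory; the static pool size is governed by the mem_fraction_static=0.88 value shown in the logged ServerArgs.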

Solution: add --disable-cuda-graph
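A minimal Python sketch that assembles the launch command so the flag is not forgotten. It uses the flags that appear in this post (--model, --trust-remote-code, --tp, --host, --port, --disable-cuda-graph) plus --mem-fraction-static, the SGLang option behind the mem_fraction_static=0.88 field in the logged ServerArgs. Lowering that fraction is an untested alternative mentioned only for completeness, not the fix used here. `build_launch_cmd` is a hypothetical helper name.

```python
"""Assemble the sglang.launch_server command used in this post.

build_launch_cmd is a hypothetical helper, not part of SGLang.
"""
import shlex
import subprocess
import sys


def build_launch_cmd(model, host="127.0.0.1", port=30000, tp=1,
                     disable_cuda_graph=True, mem_fraction_static=None):
    """Return the argv list for launching an SGLang server."""
    # sys.executable keeps the launch inside the current virtualenv.
    cmd = [sys.executable, "-m", "sglang.launch_server",
           "--model", model, "--trust-remote-code", "--tp", str(tp),
           "--host", host, "--port", str(port)]
    if disable_cuda_graph:
        # Skip CUDA graph capture, the step that died with avail_mem=0.00 GB.
        cmd.append("--disable-cuda-graph")
    if mem_fraction_static is not None:
        # Untested alternative: shrink the static memory pool
        # (the logged ServerArgs show mem_fraction_static=0.88).
        cmd += ["--mem-fraction-static", str(mem_fraction_static)]
    return cmd


if __name__ == "__main__":
    cmd = build_launch_cmd("./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B")
    print(shlex.join(cmd))
    subprocess.run(cmd, check=True)  # blocks while the server runs
```

Passing a list to subprocess.run avoids shell quoting issues; shlex.join only prints a copy-pasteable version of the same command.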

References:

[Bug] ROCm6.1.2 sglang0.3.3 cuda graph coredump · Issue #1683 · sgl-project/sglang · GitHub

[Bug] Exception: Capture cuda graph failed: Could not run 'sgl_kernel::rmsnorm' with arguments from the 'CUDA' backend. · Issue #6322 · sgl-project/sglang · GitHub

python3 -m sglang.launch_server --model ./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --trust-remote-code --tp 1 --disable-cuda-graph

[Bug] OOM for concurrent long requests · Issue #1030 · sgl-project/sglang · GitHub

Failure in actual use

Error message:

(myenv) ubun22:/mnt/c/Users/lms/Desktop/LLaVA-main/myserver$ python3 -m sglang.launch_server --model deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --trust-remote-code --tp 1 --host 0.0.0.0 --port 30000

[2025-06-01 00:01:32,639] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

[2025-06-01 00:01:55] server_args=ServerArgs(model_path='deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', tokenizer_path='deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', tokenizer_mode='auto', skip_tokenizer_init=False, load_format='auto', trust_remote_code=True, dtype='auto', kv_cache_dtype='auto', quantization=None, quantization_param_path=None, context_length=None, device='cuda', served_model_name='deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', chat_template=None, completion_template=None, is_embedding=False, enable_multimodal=None, revision=None, host='0.0.0.0', port=30000, mem_fraction_static=0.88, max_running_requests=None, max_total_tokens=None, chunked_prefill_size=2048, max_prefill_tokens=16384, schedule_policy='fcfs', schedule_conservativeness=1.0, cpu_offload_gb=0, page_size=1, tp_size=1, pp_size=1, max_micro_batch_size=None, stream_interval=1, stream_output=False, random_seed=314452693, constrained_json_whitespace_pattern=None, watchdog_timeout=300, dist_timeout=None, download_dir=None, base_gpu_id=0, gpu_id_step=1, log_level='info', log_level_http=None, log_requests=False, log_requests_level=0, show_time_cost=False, enable_metrics=False, bucket_time_to_first_token=None, bucket_e2e_request_latency=None, bucket_inter_token_latency=None, collect_tokens_histogram=False, decode_log_interval=40, enable_request_time_stats_logging=False, kv_events_config=None, api_key=None, file_storage_path='sglang_storage', enable_cache_report=False, reasoning_parser=None, dp_size=1, load_balance_method='round_robin', ep_size=1, dist_init_addr=None, nnodes=1, node_rank=0, json_model_override_args='{}', preferred_sampling_params=None, lora_paths=None, max_loras_per_batch=8, lora_backend='triton', attention_backend=None, sampling_backend='flashinfer', grammar_backend='xgrammar', speculative_algorithm=None, speculative_draft_model_path=None, speculative_num_steps=None, speculative_eagle_topk=None, speculative_num_draft_tokens=None, speculative_accept_threshold_single=1.0, 
speculative_accept_threshold_acc=1.0, speculative_token_map=None, enable_double_sparsity=False, ds_channel_config_path=None, ds_heavy_channel_num=32, ds_heavy_token_num=256, ds_heavy_channel_type='qk', ds_sparse_decode_threshold=4096, disable_radix_cache=False, disable_cuda_graph=False, disable_cuda_graph_padding=False, enable_nccl_nvls=False, enable_tokenizer_batch_encode=False, disable_outlines_disk_cache=False, disable_custom_all_reduce=False, disable_overlap_schedule=False, enable_mixed_chunk=False, enable_dp_attention=False, enable_dp_lm_head=False, enable_ep_moe=False, enable_deepep_moe=False, deepep_mode='auto', ep_num_redundant_experts=0, ep_dispatch_algorithm=None, init_expert_location='trivial', enable_eplb=False, eplb_rebalance_num_iterations=1000, expert_distribution_recorder_mode=None, expert_distribution_recorder_buffer_size=None, enable_expert_distribution_metrics=False, deepep_config=None, enable_torch_compile=False, torch_compile_max_bs=32, cuda_graph_max_bs=8, cuda_graph_bs=None, torchao_config='', enable_nan_detection=False, enable_p2p_check=False, triton_attention_reduce_in_fp32=False, triton_attention_num_kv_splits=8, num_continuous_decode_steps=1, delete_ckpt_after_loading=False, enable_memory_saver=False, allow_auto_truncate=False, enable_custom_logit_processor=False, tool_call_parser=None, enable_hierarchical_cache=False, hicache_ratio=2.0, hicache_size=0, hicache_write_policy='write_through_selective', flashinfer_mla_disable_ragged=False, warmups=None, moe_dense_tp_size=None, n_share_experts_fusion=0, disable_chunked_prefix_cache=False, disable_fast_image_processor=False, mm_attention_backend=None, debug_tensor_dump_output_folder=None, debug_tensor_dump_input_file=None, debug_tensor_dump_inject=False, disaggregation_mode='null', disaggregation_bootstrap_port=8998, disaggregation_transfer_backend='mooncake', disaggregation_ib_device=None, pdlb_url=None)

[2025-06-01 00:03:16,258] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

[2025-06-01 00:03:16,258] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

[2025-06-01 00:03:26] Attention backend not set. Use flashinfer backend by default.

[2025-06-01 00:03:26] Init torch distributed begin.

[2025-06-01 00:03:27] Init torch distributed ends. mem usage=0.00 GB

[2025-06-01 00:03:27] init_expert_location from trivial

[2025-06-01 00:04:01] Ignore import error when loading sglang.srt.models.deepseek_janus_pro. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:03] Ignore import error when loading sglang.srt.models.gemma3_causal. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:03] Ignore import error when loading sglang.srt.models.gemma3_mm. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:04] Ignore import error when loading sglang.srt.models.internvl. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:05] Ignore import error when loading sglang.srt.models.kimi_vl. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:05] Ignore import error when loading sglang.srt.models.kimi_vl_moonvit. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:06] Ignore import error when loading sglang.srt.models.llava. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:07] Ignore import error when loading sglang.srt.models.llavavid. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:07] Ignore import error when loading sglang.srt.models.minicpmo. Failed to import transformers.models.llama.modeling_llama because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:08] Ignore import error when loading sglang.srt.models.mistral. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:09] Ignore import error when loading sglang.srt.models.mllama. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:09] Ignore import error when loading sglang.srt.models.mllama4. Failed to import transformers.models.llama4.modeling_llama4 because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:10] Ignore import error when loading sglang.srt.models.pixtral. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:11] Ignore import error when loading sglang.srt.models.qwen2_5_vl. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 00:04:11] Ignore import error when loading sglang.srt.models.yivl. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/mnt/c/Users/lms/Desktop/LLaVA-main/myenv/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

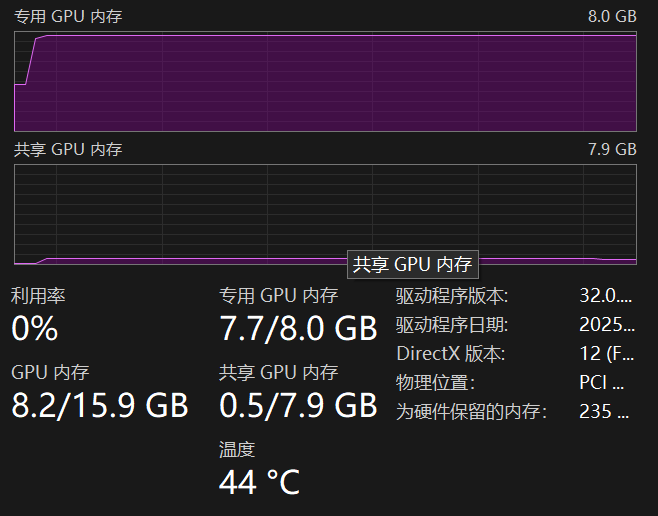

[2025-06-01 00:04:15] Load weight begin. avail mem=6.92 GB

Loading safetensors checkpoint shards: 0% Completed | 0/1 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 100% Completed | 1/1 [06:04<00:00, 364.60s/it]

[2025-06-01 00:10:22] Load weight end. type=Qwen2ForCausalLM, dtype=torch.bfloat16, avail mem=3.41 GB, mem usage=3.51 GB.

[2025-06-01 00:10:23] KV Cache is allocated. #tokens: 96589, K size: 1.29 GB, V size: 1.29 GB

[2025-06-01 00:10:23] Memory pool end. avail mem=0.00 GB

2025-06-01 00:10:26,469 - INFO - flashinfer.jit: Prebuilt kernels not found, using JIT backend

[2025-06-01 00:10:26] Capture cuda graph begin. This can take up to several minutes. avail mem=0.00 GB

[2025-06-01 00:10:27] Capture cuda graph bs [1, 2, 4, 8]

Capturing batches (avail_mem=0.00 GB): 0%| | 0/4 [00:00<?, ?it/s]2025-06-01 00:10:35,809 - INFO - flashinfer.jit: Loading JIT ops: batch_prefill_with_kv_cache_dtype_q_bf16_dtype_kv_bf16_dtype_o_bf16_dtype_idx_i32_head_dim_qk_128_head_dim_vo_128_posenc_0_use_swa_False_use_logits_cap_False_f16qk_False

[2025-06-01 00:10:50] Child process unexpectedly failed with an exit code 9. pid=114785

Successful deployment:

ubun22:/mnt/c/Users/lms/Desktop/LLaVA-main/myserver$ python3 -m sglang.launch_server --model ./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B --trust-remote-code --tp 1 --disable-cuda-graph

INFO 06-01 01:24:55 [__init__.py:243] Automatically detected platform cuda.

[2025-06-01 01:24:57,256] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

INFO 06-01 01:24:57 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:24:57 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:24:57 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:24:58 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:24:58 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:24:58 [__init__.py:243] Automatically detected platform cuda.

[2025-06-01 01:25:01] server_args=ServerArgs(model_path='./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', tokenizer_path='./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', tokenizer_mode='auto', skip_tokenizer_init=False, load_format='auto', trust_remote_code=True, dtype='auto', kv_cache_dtype='auto', quantization=None, quantization_param_path=None, context_length=None, device='cuda', served_model_name='./deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B', chat_template=None, completion_template=None, is_embedding=False, enable_multimodal=None, revision=None, host='127.0.0.1', port=30000, mem_fraction_static=0.88, max_running_requests=None, max_total_tokens=None, chunked_prefill_size=2048, max_prefill_tokens=16384, schedule_policy='fcfs', schedule_conservativeness=1.0, cpu_offload_gb=0, page_size=1, tp_size=1, pp_size=1, max_micro_batch_size=None, stream_interval=1, stream_output=False, random_seed=201101802, constrained_json_whitespace_pattern=None, watchdog_timeout=300, dist_timeout=None, download_dir=None, base_gpu_id=0, gpu_id_step=1, log_level='info', log_level_http=None, log_requests=False, log_requests_level=0, show_time_cost=False, enable_metrics=False, bucket_time_to_first_token=None, bucket_e2e_request_latency=None, bucket_inter_token_latency=None, collect_tokens_histogram=False, decode_log_interval=40, enable_request_time_stats_logging=False, kv_events_config=None, api_key=None, file_storage_path='sglang_storage', enable_cache_report=False, reasoning_parser=None, dp_size=1, load_balance_method='round_robin', ep_size=1, dist_init_addr=None, nnodes=1, node_rank=0, json_model_override_args='{}', preferred_sampling_params=None, lora_paths=None, max_loras_per_batch=8, lora_backend='triton', attention_backend=None, sampling_backend='flashinfer', grammar_backend='xgrammar', speculative_algorithm=None, speculative_draft_model_path=None, speculative_num_steps=None, speculative_eagle_topk=None, speculative_num_draft_tokens=None, speculative_accept_threshold_single=1.0, 
speculative_accept_threshold_acc=1.0, speculative_token_map=None, enable_double_sparsity=False, ds_channel_config_path=None, ds_heavy_channel_num=32, ds_heavy_token_num=256, ds_heavy_channel_type='qk', ds_sparse_decode_threshold=4096, disable_radix_cache=False, disable_cuda_graph=True, disable_cuda_graph_padding=False, enable_nccl_nvls=False, enable_tokenizer_batch_encode=False, disable_outlines_disk_cache=False, disable_custom_all_reduce=False, disable_overlap_schedule=False, enable_mixed_chunk=False, enable_dp_attention=False, enable_dp_lm_head=False, enable_ep_moe=False, enable_deepep_moe=False, deepep_mode='auto', ep_num_redundant_experts=0, ep_dispatch_algorithm=None, init_expert_location='trivial', enable_eplb=False, eplb_rebalance_num_iterations=1000, expert_distribution_recorder_mode=None, expert_distribution_recorder_buffer_size=None, enable_expert_distribution_metrics=False, deepep_config=None, enable_torch_compile=False, torch_compile_max_bs=32, cuda_graph_max_bs=8, cuda_graph_bs=None, torchao_config='', enable_nan_detection=False, enable_p2p_check=False, triton_attention_reduce_in_fp32=False, triton_attention_num_kv_splits=8, num_continuous_decode_steps=1, delete_ckpt_after_loading=False, enable_memory_saver=False, allow_auto_truncate=False, enable_custom_logit_processor=False, tool_call_parser=None, enable_hierarchical_cache=False, hicache_ratio=2.0, hicache_size=0, hicache_write_policy='write_through_selective', flashinfer_mla_disable_ragged=False, warmups=None, moe_dense_tp_size=None, n_share_experts_fusion=0, disable_chunked_prefix_cache=False, disable_fast_image_processor=False, mm_attention_backend=None, debug_tensor_dump_output_folder=None, debug_tensor_dump_input_file=None, debug_tensor_dump_inject=False, disaggregation_mode='null', disaggregation_bootstrap_port=8998, disaggregation_transfer_backend='mooncake', disaggregation_ib_device=None, pdlb_url=None)

INFO 06-01 01:25:06 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:06 [__init__.py:243] Automatically detected platform cuda.

[2025-06-01 01:25:07,689] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

[2025-06-01 01:25:07,689] [INFO] [real_accelerator.py:161:get_accelerator] Setting ds_accelerator to cuda (auto detect)

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:49: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.def forward(ctx, input, weight, bias=None):

/home/ubun22/.local/lib/python3.10/site-packages/deepspeed/runtime/zero/linear.py:67: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.def backward(ctx, grad_output):

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

INFO 06-01 01:25:08 [__init__.py:243] Automatically detected platform cuda.

[2025-06-01 01:25:10] Attention backend not set. Use flashinfer backend by default.

[2025-06-01 01:25:10] Init torch distributed begin.

[2025-06-01 01:25:10] Init torch distributed ends. mem usage=0.00 GB

[2025-06-01 01:25:10] init_expert_location from trivial

[2025-06-01 01:25:14] Ignore import error when loading sglang.srt.models.deepseek_janus_pro. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.gemma3_causal. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.gemma3_mm. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.internvl. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.kimi_vl. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.kimi_vl_moonvit. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.llava. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.llavavid. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.minicpmo. Failed to import transformers.models.llama.modeling_llama because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.mistral. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.mllama. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:15] Ignore import error when loading sglang.srt.models.mllama4. Failed to import transformers.models.llama4.modeling_llama4 because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:16] Ignore import error when loading sglang.srt.models.pixtral. Failed to import transformers.modeling_utils because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:16] Ignore import error when loading sglang.srt.models.qwen2_5_vl. cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:16] Ignore import error when loading sglang.srt.models.yivl. Failed to import transformers.models.clip.modeling_clip because of the following error (look up to see its traceback):

cannot import name 'log' from 'torch.distributed.elastic.agent.server.api' (/home/ubun22/.local/lib/python3.10/site-packages/torch/distributed/elastic/agent/server/api.py)

[2025-06-01 01:25:16] Load weight begin. avail mem=6.92 GB

INFO 06-01 01:25:16 [__init__.py:243] Automatically detected platform cuda.

Loading safetensors checkpoint shards: 0% Completed | 0/1 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 100% Completed | 1/1 [03:19<00:00, 199.58s/it]

INFO 06-01 01:28:36 [__init__.py:243] Automatically detected platform cuda.

[2025-06-01 01:28:36] Load weight end. type=Qwen2ForCausalLM, dtype=torch.bfloat16, avail mem=3.41 GB, mem usage=3.51 GB.

[2025-06-01 01:28:37] KV Cache is allocated. #tokens: 96589, K size: 1.29 GB, V size: 1.29 GB

[2025-06-01 01:28:37] Memory pool end. avail mem=0.00 GB

2025-06-01 01:28:37,689 - INFO - flashinfer.jit: Prebuilt kernels not found, using JIT backend

[2025-06-01 01:28:38] max_total_num_tokens=96589, chunked_prefill_size=2048, max_prefill_tokens=16384, max_running_requests=2049, context_len=131072

[2025-06-01 01:28:39] INFO: Started server process [26241]

[2025-06-01 01:28:39] INFO: Waiting for application startup.

[2025-06-01 01:28:39] INFO: Application startup complete.

[2025-06-01 01:28:39] INFO: Uvicorn running on http://127.0.0.1:30000 (Press CTRL+C to quit)

[2025-06-01 01:28:40] INFO: 127.0.0.1:54268 - "GET /get_model_info HTTP/1.1" 200 OK

[2025-06-01 01:28:40] Prefill batch. #new-seq: 1, #new-token: 7, #cached-token: 0, token usage: 0.00, #running-req: 0, #queue-req: 0

2025-06-01 01:28:44,336 - INFO - flashinfer.jit: Loading JIT ops: batch_prefill_with_kv_cache_dtype_q_bf16_dtype_kv_bf16_dtype_o_bf16_dtype_idx_i32_head_dim_qk_128_head_dim_vo_128_posenc_0_use_swa_False_use_logits_cap_False_f16qk_False
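Once Uvicorn reports it is running on http://127.0.0.1:30000, a quick smoke test can go through SGLang's native /generate endpoint. Below is a minimal standard-library sketch. It assumes the request shape {"text": ..., "sampling_params": {...}} and a JSON response carrying a "text" field, as I understand the native API; the sampling values are arbitrary. /get_model_info is the same endpoint that shows up in the log above.

```python
"""Smoke-test a running SGLang server using only the standard library."""
import json
import urllib.request

BASE_URL = "http://127.0.0.1:30000"  # address from the Uvicorn log line


def make_generate_request(prompt, max_new_tokens=64, temperature=0.6,
                          base_url=BASE_URL):
    """Build a POST request for the native /generate endpoint (assumed schema)."""
    payload = {
        "text": prompt,
        "sampling_params": {"max_new_tokens": max_new_tokens,
                            "temperature": temperature},
    }
    return urllib.request.Request(
        f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text field."""
    req = make_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read())["text"]


if __name__ == "__main__":
    with urllib.request.urlopen(f"{BASE_URL}/get_model_info", timeout=10) as resp:
        print(json.loads(resp.read()))
    print(generate("What is 1 + 1?"))
```

Since this run was started without --host, the server binds to 127.0.0.1 only, so the test must run on the same machine; reaching it from elsewhere needs --host 0.0.0.0, the setting tried in section 2.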
