Introduction

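The environment below is built on VMware virtual machines; you can use whichever virtualization platform you prefer. K8S releases can differ from one another, so make sure the versions of the related components are compatible.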

Preparation

Deployment versions

| Name | Version | Description |
| --- | --- | --- |
| centos | 7.9 | |
| docker | 20.10.8 | |
| k8s | v1.20.10 | |
| calico | v3.20.0 | |

K8S node plan

Three master nodes and two worker nodes:

| Machine | IP (example) | Description |
| --- | --- | --- |
| master01 | 10.17.0.119 | K8S master node |
| master02 | 10.17.0.120 | K8S master node |
| master03 | 10.17.0.121 | K8S master node |
| worker01 | 10.17.0.122 | Worker node |
| worker02 | 10.17.0.123 | Worker node |

Download the CentOS image

- Official website: https://www.centos.org/download/
- Tsinghua University mirror: https://mirrors.tuna.tsinghua.edu.cn/centos/

The following steps are performed inside the CentOS system.

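Add the Aliyun yum repository

Back up the original files

Back up the files in /etc/yum.repos.d/ (CentOS-Base.repo on CentOS 7 and earlier, CentOS-Linux-*.repo on CentOS 8):

for f in /etc/yum.repos.d/*.repo; do cp "$f" "$f.bak"; done

Add the Aliyun repository (you can use the content below):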

vi /etc/yum.repos.d/CentOS-Base.repo

#
# The mirror system uses the connecting IP address of the client and the
# update status of each mirror to pick mirrors that are updated to and
# geographically close to the client. You should use this for CentOS updates
# unless you are manually picking other mirrors.
#
# If the mirrorlist= does not work for you, as a fall back you can try the
# remarked out baseurl= line instead.
#

[base]
name=CentOS-$releasever - Base - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates
[updates]
name=CentOS-$releasever - Updates - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful
[extras]
name=CentOS-$releasever - Extras - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
gpgcheck=1
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages
[centosplus]
name=CentOS-$releasever - Plus - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users
[contrib]
name=CentOS-$releasever - Contrib - mirrors.aliyun.com
failovermethod=priority
baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/
gpgcheck=1
enabled=0
gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Update yum

yum update

Synchronize the server time

Install chrony

yum -y install chrony

Add NTP servers located in China

echo "server ntp1.aliyun.com iburst" >> /etc/chrony.conf
echo "server ntp2.aliyun.com iburst" >> /etc/chrony.conf
echo "server ntp3.aliyun.com iburst" >> /etc/chrony.conf
echo "server ntp.tuna.tsinghua.edu.cn iburst" >> /etc/chrony.conf
tail -n 4 /etc/chrony.conf
systemctl restart chronyd

Set up a cron job that restarts chronyd every minute to re-sync the clock

echo "* * * * * /usr/bin/systemctl restart chronyd" | tee -a /var/spool/cron/root

K8S environment preparation

Configure /etc/hosts on every machine so the nodes can reach each other by hostname.
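Running the cat >> /etc/hosts heredoc below a second time appends the entries again. The following is a minimal idempotent sketch that assumes the same five IP/hostname pairs as the node plan. The helper names append_once and add_cluster_hosts and the HOSTS_FILE override are illustrative and not part of any tool. The sketch also assumes each machine's hostname already matches its entry, for example set with hostnamectl set-hostname master01. Every node needs a unique name, and kubeadm uses the node name as the etcd member name that is edited later.

```shell
# Idempotent /etc/hosts update (sketch). HOSTS_FILE is a hypothetical override
# so the function can be tried on a scratch file; it defaults to /etc/hosts.

# Append a line to a file only if that exact line is not already present.
append_once() {
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

add_cluster_hosts() {
  hosts_file=${HOSTS_FILE:-/etc/hosts}
  for entry in \
    "10.17.0.119 master01" \
    "10.17.0.120 master02" \
    "10.17.0.121 master03" \
    "10.17.0.122 worker01" \
    "10.17.0.123 worker02"
  do
    append_once "$entry" "$hosts_file"
  done
}
```

Run add_cluster_hosts as root on each node. A second run leaves the file unchanged.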

cat >> /etc/hosts <<OFF
10.17.0.119 master01
10.17.0.120 master02
10.17.0.121 master03
10.17.0.122 worker01
10.17.0.123 worker02
OFF

Disable the swap partition

If swap is not disabled, kubelet will fail to start with its default configuration.
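The sed expression in the commands below comments out every line that merely contains the word swap. That includes lines that are already comments, which end up as ##. The following sketch is a narrower variant that assumes standard whitespace-separated fstab fields. It only comments out active lines whose third field (the filesystem type) is swap. The function names and the FSTAB override are hypothetical.

```shell
# Sketch: comment out active swap entries in fstab. FSTAB is a hypothetical
# override for testing; it defaults to /etc/fstab.
comment_swap_entries() {
  fstab=${FSTAB:-/etc/fstab}
  # Only lines that do not start with "#" and whose 3rd field is exactly "swap".
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}

# Both swap steps of this section: off now, and still off after the next boot.
disable_swap() {
  swapoff -a && comment_swap_entries
}
```

Running comment_swap_entries again changes nothing, because the edited lines now start with #.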

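# Disable swap for the current session only; it comes back after a reboot
swapoff -a
# Comment out the swap entry in /etc/fstab so swap stays off after the next boot
sed -i 's/.*swap.*/#&/' /etc/fstab

Disable SELinux and the firewall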

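K8S uses many ports, and you would have to check the official documentation to see which ones must be open. That is hard to get right if you are not familiar with them, so this guide simply turns the firewall off. If you know which ports K8S uses, you can open only those instead.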
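For the SELinux part of this step, the four sed commands below can be collapsed into one. On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. GNU sed -i replaces a symlink with a regular copy unless --follow-symlinks is given, so editing /etc/selinux/config alone is enough. The following is a sketch, and the SELINUX_CONFIG override is hypothetical.

```shell
# Sketch: disable SELinux in its real config file with one sed.
# SELINUX_CONFIG is a hypothetical override for testing.
disable_selinux_config() {
  cfg=${SELINUX_CONFIG:-/etc/selinux/config}
  # Rewrites SELINUX=enforcing or SELINUX=permissive; SELINUXTYPE= is untouched.
  sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}

# Usage (as root): setenforce 0 && disable_selinux_config
```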

# Disable SELinux
setenforce 0
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux
sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config
# Stop firewalld, keep it from starting at boot, and check its status
systemctl stop firewalld.service
systemctl disable firewalld.service
systemctl status firewalld.service

Configure IP forwarding

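By default a host does not forward IP packets. The settings below enable IPv4 forwarding and pass bridged IPv4 and IPv6 traffic through iptables and ip6tables. kubeadm's preflight checks expect bridge-nf-call-iptables and ip_forward to be set to 1.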
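The following sketch writes the same settings so that they survive reruns and reboots. It assumes systemd's modules-load.d mechanism, which CentOS 7 has, is used to load br_netfilter at boot. Without br_netfilter loaded, the net.bridge.* settings cannot be applied at boot. The function name and the SYSCTL_DIR/MODULES_DIR overrides are hypothetical.

```shell
# Sketch: K8S network prerequisites that survive reruns and reboots.
# SYSCTL_DIR and MODULES_DIR are hypothetical overrides for testing.
write_k8s_net_config() {
  sysctl_dir=${SYSCTL_DIR:-/etc/sysctl.d}
  modules_dir=${MODULES_DIR:-/etc/modules-load.d}
  # ">" overwrites, so running this twice does not duplicate the keys.
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
  # systemd-modules-load reads this directory early in boot, so br_netfilter
  # should be loaded before systemd applies the sysctl.d files.
  printf '%s\n' br_netfilter > "$modules_dir/k8s.conf"
}

# Usage (as root):
#   write_k8s_net_config && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf
```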

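# Create /etc/sysctl.d/k8s.conf with the following content:
cat > /etc/sysctl.d/k8s.conf << OFF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
OFF
# Apply the changes (br_netfilter must be loaded first, otherwise the net.bridge.* keys do not exist):
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

Load the IPVS kernel modules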

kube-proxy uses these kernel modules for Service load balancing when it runs in IPVS mode.
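The modprobe commands below last only until the next reboot. The conntrack module name also depends on the kernel, because upstream Linux 4.19 merged nf_conntrack_ipv4 into nf_conntrack. The following sketch picks the name from the kernel release and writes the IPVS module list to a modules-load.d file. It assumes the plain upstream version rule. Some distribution kernels backport the change, so on anything other than the stock CentOS 7 3.10 kernel, confirm with modinfo nf_conntrack_ipv4. The function names are hypothetical.

```shell
# Kernel-dependent conntrack module name (sketch).
conntrack_module() {
  # $1 = kernel release such as "3.10.0-1160.el7.x86_64"
  oldest=$(printf '%s\n%s\n' "$1" 4.19 | sort -V | head -n 1)
  if [ "$oldest" = 4.19 ]; then
    echo nf_conntrack        # kernel 4.19 or newer
  else
    echo nf_conntrack_ipv4   # older kernels, e.g. CentOS 7's 3.10
  fi
}

# Write the IPVS module list, one module per line, to the file given as $2.
write_ipvs_modules() {
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module "$1")" > "$2"
}

# Usage (as root):
#   write_ipvs_modules "$(uname -r)" /etc/modules-load.d/ipvs.conf
#   systemctl restart systemd-modules-load
```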

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

lsmod | grep ip_vs

lsmod | grep nf_conntrack_ipv4

If you need to inspect the K8S load-balancing paths, you will need ipvsadm and ipset:

# Install the IPVS management tools
yum install -y ipvsadm ipset

Install Docker

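Install the required system tools

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Aliyun stable Docker repository

If this fails because of network problems, download the repo file separately and place it in /etc/yum.repos.d/.

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Update the yum package index

yum makecache fast

List the available Docker versions

# List docker-ce versions from newest to oldest
yum list docker-ce.x86_64 --showduplicates | sort -r

Install the Docker packages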

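If no version number is given, the latest version is installed. Install containerd.io alongside Docker: it is the container runtime Docker itself runs on. Newer K8S releases no longer use Docker as their default container runtime (the built-in Docker support, dockershim, was removed in 1.24), so make sure containerd.io is installed.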

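yum install -y docker-ce-20.10.8 docker-ce-cli-20.10.8 containerd.io-1.4.10

On CentOS 8, where yum is backed by dnf, append --allowerasing to the command above (the CentOS 7 yum does not accept this option) and uninstall Podman:

yum remove podman -y

Start Docker and enable it at boot

systemctl start docker
systemctl enable docker --now

Check the Docker information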

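docker info

Configure a registry mirror (the address below is the author's Aliyun accelerator; replace it with your own)

vi /etc/docker/daemon.json
{
  "registry-mirrors": ["https://2lqq34jg.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}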
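Editing daemon.json in vi is easy to get wrong, and Docker refuses to start if the file is not valid JSON. The following sketch writes the same three settings in one step. The function name, its mirror argument and the DOCKER_DAEMON_JSON override are hypothetical.

```shell
# Sketch: write /etc/docker/daemon.json non-interactively.
# $1 = your registry mirror URL; DOCKER_DAEMON_JSON is a hypothetical override.
write_docker_daemon_json() {
  mirror=$1
  target=${DOCKER_DAEMON_JSON:-/etc/docker/daemon.json}
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}
EOF
}

# After "systemctl daemon-reload && systemctl restart docker", this should print systemd:
#   docker info --format '{{.CgroupDriver}}'
```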

Restart Docker

systemctl daemon-reload
systemctl restart docker

Install kubeadm, kubelet and kubectl (master and worker nodes)

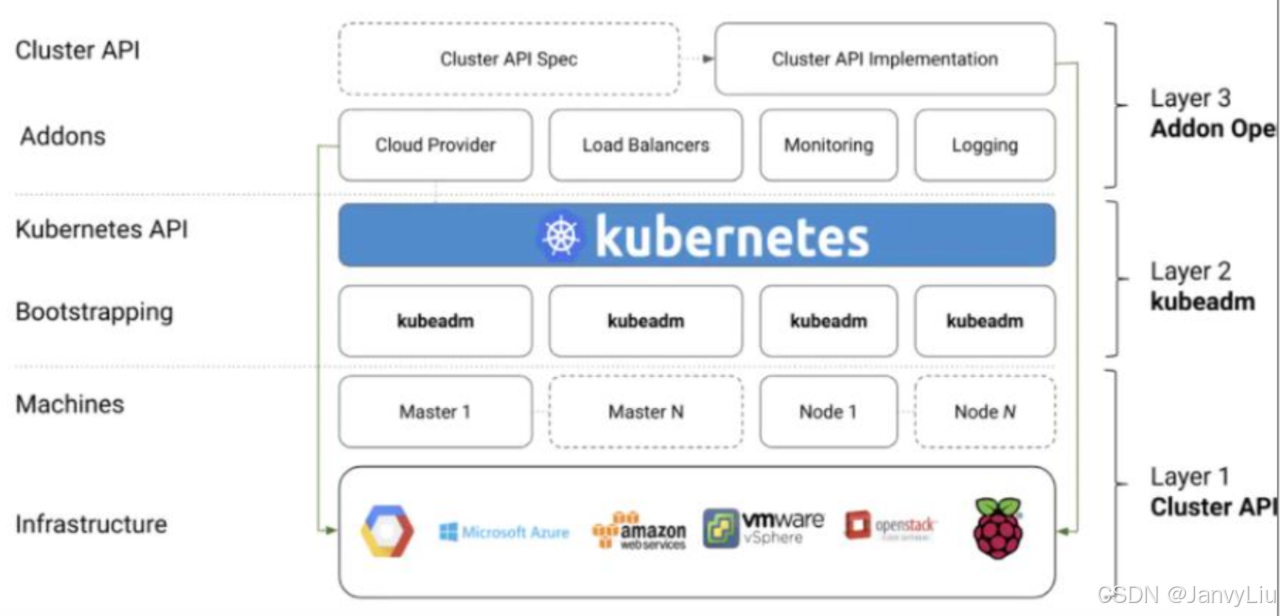

kubeadm 是 kubernetes 的集群安装工具,能够快速安装 kubernetes 集群。能完成下面的拓扑安装

-

单节点 k8s (1+0)

-

单 master 和多 node 的 k8s 系统(1+n)

-

Mater HA 和多 node 的 k8s 系统(m*1+n)

kubeadm 在整个 K8S 架构里的位置

Add the package repository

cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Refresh the cache

yum makecache

List the available kubeadm versions

yum list kubeadm --showduplicates | sort -r

Install kubeadm, kubectl and kubelet

yum install kubeadm-1.20.10 kubectl-1.20.10 kubelet-1.20.10 -y

Check the versions

kubeadm version
kubectl version --client

Start kubelet and enable it at boot

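Note that kubelet keeps restarting at this point because it has no configuration yet. It becomes healthy once kubeadm initializes the cluster.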

systemctl daemon-reload

systemctl enable --now kubelet

systemctl start kubelet.service

systemctl enable kubelet.service

systemctl status kubelet

Cluster installation

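Pull the images in advance

With a good network connection you can skip this step; otherwise pull the images ahead of time:

kubeadm config images pull --image-repository="registry.aliyuncs.com/google_containers" --kubernetes-version=v1.20.10

Initialize the cluster from the command line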

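- Pod network CIDR (optional): --pod-network-cidr=172.18.207.0/16
- Service CIDR (optional): --service-cidr=10.233.0.0/16
- Image repository (optional): --image-repository registry.aliyuncs.com/google_containers

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.20.10 --pod-network-cidr=172.18.207.0/16 --service-cidr=10.233.0.0/16

Note: master02 and master03 join later with --control-plane, so also pass --control-plane-endpoint, for example --control-plane-endpoint=10.17.0.119:6443 or a load-balancer address. kubeadm refuses to add control-plane nodes to a cluster initialized without a control-plane endpoint.

Output on successful initialization: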

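Note: (1) save the mkdir/cp/chown commands from the output, because you need them to use the cluster; (2) save the kubeadm join command at the end, because the nodes need it to join the cluster.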

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.17.0.119:6443 --token vm59km.0djj6xlhrye4u8ts \
    --discovery-token-ca-cert-hash sha256:bb70d2d1851de92cc946105141ede212d769a22db119ce4a6d0e62d2fbe1b637
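The init flags can also be kept in a kubeadm configuration file, which keeps the Kubernetes version in one place next to the installed package version. The following sketch assumes the kubeadm.k8s.io/v1beta2 config API used by kubeadm 1.20. It adds two settings that are not in the command above:

- controlPlaneEndpoint, which is needed before master02 and master03 can join with --control-plane. The value 10.17.0.119:6443 is only a placeholder; a load-balancer address is the usual choice for real HA.
- A KubeProxyConfiguration with mode: ipvs, which would make the later kube-proxy ConfigMap edit unnecessary.

podSubnet is written as 172.18.0.0/16, the network that 172.18.207.0/16 denotes. The function name and both variables are hypothetical.

```shell
# Sketch: kubeadm config equivalent to the init flags above, plus
# controlPlaneEndpoint and kube-proxy IPVS mode (both assumptions).
# K8S_VERSION and CONTROL_PLANE_ENDPOINT are hypothetical variables.
K8S_VERSION=${K8S_VERSION:-v1.20.10}
CONTROL_PLANE_ENDPOINT=${CONTROL_PLANE_ENDPOINT:-10.17.0.119:6443}

write_kubeadm_config() {
  # $1 = output file
  cat > "$1" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: ${K8S_VERSION}
imageRepository: registry.aliyuncs.com/google_containers
controlPlaneEndpoint: "${CONTROL_PLANE_ENDPOINT}"
networking:
  podSubnet: 172.18.0.0/16
  serviceSubnet: 10.233.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage on master01:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```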

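Set up cluster access

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get pod -A should now print output like the following (coredns stays Pending until a network plugin is installed):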

NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-59d64cd4d4-s9lwj 0/1 Pending 0 6m1s
kube-system coredns-59d64cd4d4-w64jk 0/1 Pending 0 6m1s
kube-system etcd-localhost.localdomain 1/1 Running 0 6m16s
kube-system kube-apiserver-localhost.localdomain 1/1 Running 0 6m16s
kube-system kube-controller-manager-localhost.localdomain 1/1 Running 0 6m16s
kube-system kube-proxy-4sc9n 1/1 Running 0 6m2s
kube-system kube-scheduler-localhost.localdomain 1/1 Running 0 6m16s

Get the component status

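kubectl get cs  # controller-manager and scheduler are reported as Unhealthy at this point

Cause: controller-manager and scheduler have their insecure ports disabled (--port=0), so the health checks on ports 10252 and 10251 are refused.

Fix: re-enable the ports by commenting out the line - --port=0 in the corresponding manifest files; see the solution below.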

NAME STATUS MESSAGE ERROR
controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
etcd-0 Healthy {"health":"true"}

Solution:

- List the files:

ll /etc/kubernetes/manifests
-rw------- 1 root root 2221 Oct 27 06:24 etcd.yaml
-rw------- 1 root root 3340 Oct 27 06:24 kube-apiserver.yaml
-rw------- 1 root root 2826 Oct 27 06:24 kube-controller-manager.yaml
-rw------- 1 root root 1413 Oct 27 06:24 kube-scheduler.yaml

-

将kube-scheduler.yml、kube-controller-manager.yml中的--port=0注释(每个节点都需要修改)

-

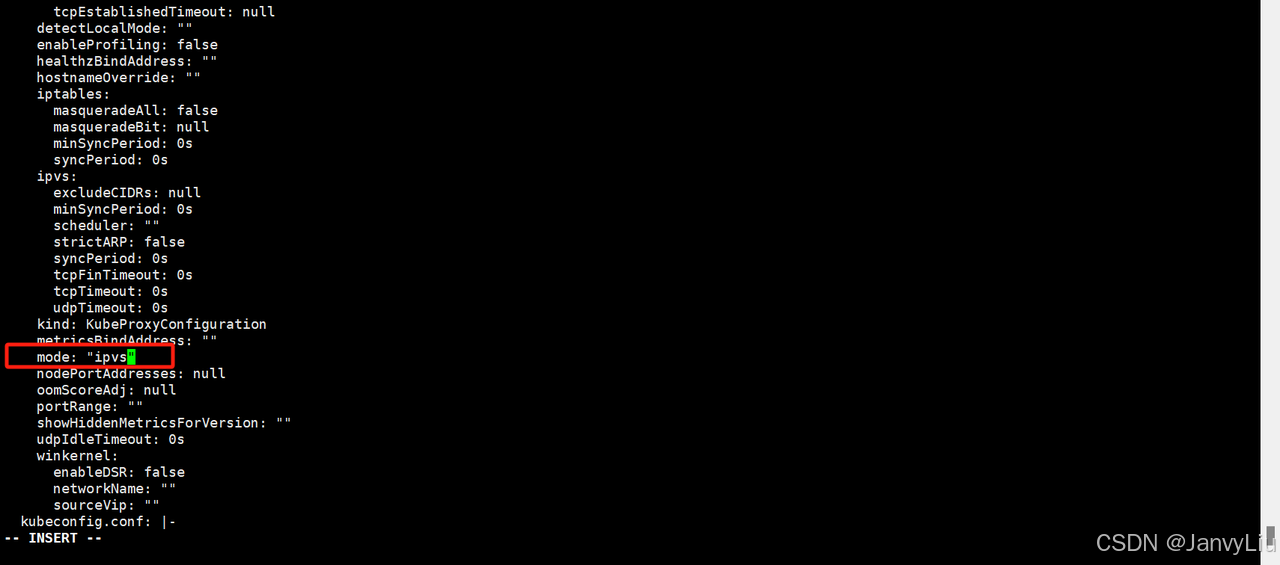
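Commenting out the flag by hand on every master can also be scripted. The following sketch assumes the manifests contain the flag as a list item written exactly as - --port=0. The function name and the MANIFEST_DIR override are hypothetical. Avoid leaving backup copies inside /etc/kubernetes/manifests, because kubelet may try to load every file in that directory as a static Pod.

```shell
# Sketch: comment out "- --port=0" in the scheduler and controller-manager
# manifests. MANIFEST_DIR is a hypothetical override for testing.
comment_out_port_zero() {
  dir=${MANIFEST_DIR:-/etc/kubernetes/manifests}
  for f in "$dir/kube-scheduler.yaml" "$dir/kube-controller-manager.yaml"; do
    # Keep the original indentation and turn the list item into a YAML comment.
    sed -i -E 's/^([[:space:]]*)- --port=0[[:space:]]*$/\1#- --port=0/' "$f"
  done
}

# Usage (as root, on every master): comment_out_port_zero
```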

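Change the kube-proxy proxy mode. It is empty by default, which means iptables; set it to ipvs. Older releases only had the iptables mode; IPVS mode became generally available in 1.11.

kubectl edit configmap kube-proxy -n kube-system

In the editor, change mode: "" to mode: "ipvs". The running kube-proxy Pods do not pick up the change on their own, so delete them and let the DaemonSet recreate them:

kubectl -n kube-system delete pod -l k8s-app=kube-proxy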
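kubectl edit opens an interactive editor. The following non-interactive sketch pipes the ConfigMap through sed. It assumes the mode line appears as mode: "", as in a default kubeadm 1.20 installation. set_ipvs_mode is a hypothetical helper name.

```shell
# Sketch: read the kube-proxy ConfigMap YAML on stdin and set the empty proxy
# mode to "ipvs". Only a line that is exactly 'mode: ""' (any indentation) is
# rewritten, so keys such as detectLocalMode are left alone.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: ""[[:space:]]*$/\1mode: "ipvs"/'
}

# Usage on a master (requires a working kubeconfig):
#   kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```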

Install the Calico network plugin

Download the manifest

wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.20/manifests/calico.yaml

If your network connection is poor, you can use the resource below.

Edit calico.yaml

# Add the following below - name: CLUSTER_TYPE
- name: CLUSTER_TYPE
  value: "k8s,bgp"
# New entries start here
- name: NODEIP
  valueFrom:
    fieldRef:
      apiVersion: v1
      fieldPath: status.hostIP
- name: IP_AUTODETECTION_METHOD
  value: can-reach=$(NODEIP)

Configuration explained

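NODEIP environment variable:

- It is set through a fieldRef to the current node's hostIP, i.e. the node's actual IP address.
- status.hostIP is resolved for each node automatically, so in a multi-node cluster every node uses its own IP address.

IP_AUTODETECTION_METHOD:

- IP_AUTODETECTION_METHOD is the Calico parameter that controls how the node's IP address is detected and selected.
- can-reach=$(NODEIP) tells Calico to pick the local address it would use to reach NODEIP according to the routing table. Kubernetes expands $(NODEIP) because NODEIP is defined earlier in the same env list. This setting is common on hosts with several NICs or complex routing, and it ensures that the address Calico picks is reachable inside the cluster.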
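The calico.yaml edit can also be scripted with awk. The following sketch assumes the Calico v3.20 manifest layout, in which - name: CLUSTER_TYPE is followed directly by its value: line. The inserted entries reuse that entry's indentation. The function name is hypothetical, and running it twice inserts the entries twice.

```shell
# Sketch: insert NODEIP and IP_AUTODETECTION_METHOD right after the
# CLUSTER_TYPE env entry. Reads the manifest on stdin, writes it to stdout.
add_calico_ip_detection() {
  awk '
    { print }
    /- name: CLUSTER_TYPE[ ]*$/ {
      match($0, /^[ ]*/); ind = substr($0, 1, RLENGTH); pending = 1; next
    }
    pending && /value:/ {
      print ind "- name: NODEIP"
      print ind "  valueFrom:"
      print ind "    fieldRef:"
      print ind "      apiVersion: v1"
      print ind "      fieldPath: status.hostIP"
      print ind "- name: IP_AUTODETECTION_METHOD"
      print ind "  value: can-reach=$(NODEIP)"
      pending = 0
    }
  '
}

# Usage: add_calico_ip_detection < calico.yaml > calico-edited.yaml
```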

Install Calico

kubectl apply -f calico.yaml

Operations on the other masters

On master02 and master03, create the directories for the certificates

mkdir -pv /etc/kubernetes/pki/etcd && mkdir -pv ~/.kube/

Copy the certificates from master01 to master02 and master03

# Run on master01
scp /etc/kubernetes/pki/ca.* /etc/kubernetes/pki/sa.* /etc/kubernetes/pki/front-proxy-ca.* root@master02:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/etcd/ca.* root@master02:/etc/kubernetes/pki/etcd/
scp /etc/kubernetes/pki/ca.* /etc/kubernetes/pki/sa.* /etc/kubernetes/pki/front-proxy-ca.* root@master03:/etc/kubernetes/pki/
scp /etc/kubernetes/pki/etcd/ca.* root@master03:/etc/kubernetes/pki/etcd/

On master01, generate a join command (or reuse the token saved during initialization)

kubeadm token create --print-join-command

Join master02 and master03 to the cluster (append --control-plane to the printed command)

kubeadm join 10.17.0.119:6443 --token eaqaum.22fdpncfy7qheke3 --discovery-token-ca-cert-hash sha256:407f37d2f640cbd48c0dcbd4868a4c49978921d79496b921f34d3fe1c092b9e6 --control-plane

Configure cluster access on master02 and master03

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
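The certificate copy and the control-plane join can be wrapped in two small helpers run from master01. In a kubeadm PKI directory, the ca.*, sa.* and front-proxy-ca.* globs used above match these files:

- ca.crt and ca.key
- sa.key and sa.pub
- front-proxy-ca.crt and front-proxy-ca.key
- etcd/ca.crt and etcd/ca.key

The following sketch lists those files explicitly. The helper names and the SCP override (set it to echo for a dry run) are hypothetical.

```shell
# Helpers for joining master02/master03 (sketch, run on master01).
# SCP is a hypothetical override: SCP=echo prints the commands instead of copying.
PKI=/etc/kubernetes/pki

# Copy the shared CA, service-account and front-proxy keys plus the etcd CA.
copy_control_plane_certs() {
  for host in "$@"; do
    ${SCP:-scp} "$PKI/ca.crt" "$PKI/ca.key" "$PKI/sa.key" "$PKI/sa.pub" \
      "$PKI/front-proxy-ca.crt" "$PKI/front-proxy-ca.key" "root@$host:$PKI/"
    ${SCP:-scp} "$PKI/etcd/ca.crt" "$PKI/etcd/ca.key" "root@$host:$PKI/etcd/"
  done
}

# Append --control-plane to a worker join command such as the one printed by
# "kubeadm token create --print-join-command" (trailing whitespace is trimmed).
control_plane_join_command() {
  printf '%s --control-plane\n' "$(printf '%s' "$1" | sed 's/[[:space:]]*$//')"
}

# Usage on master01:
#   copy_control_plane_certs master02 master03
#   control_plane_join_command "$(kubeadm token create --print-join-command)"
# then run the printed command on master02 and master03.
```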

Configure etcd high availability

In the default etcd manifest, --initial-cluster lists only the node itself, so change it to list all three master hosts:

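- On master01 (the member names are the node names, so this assumes the hostnames are master01 to master03):

sed -i 's|--initial-cluster=master01=https://10.17.0.119:2380|--initial-cluster=master01=https://10.17.0.119:2380,master02=https://10.17.0.120:2380,master03=https://10.17.0.121:2380|' /etc/kubernetes/manifests/etcd.yaml

Add the relevant members on the other two nodes in the same way.

Check that --initial-cluster on all three masters contains all three members. After the change, the full configuration looks like this: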

- master01 node:

spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://10.17.0.119:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://10.17.0.119:2380
    - --initial-cluster=master01=https://10.17.0.119:2380,master02=https://10.17.0.120:2380,master03=https://10.17.0.121:2380
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379,https://10.17.0.119:2379
    - --listen-metrics-urls=http://127.0.0.1:2381
    - --listen-peer-urls=https://10.17.0.119:2380

- master02 node:

spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://10.17.0.120:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://10.17.0.120:2380
    - --initial-cluster=master02=https://10.17.0.120:2380,master01=https://10.17.0.119:2380,master03=https://10.17.0.121:2380
    - --initial-cluster-state=existing
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379,https://10.17.0.120:2379
    - --listen-metrics-urls=http://127.0.0.1:2381
    - --listen-peer-urls=https://10.17.0.120:2380

- master03 node:

spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://10.17.0.121:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://10.17.0.121:2380
    - --initial-cluster=master03=https://10.17.0.121:2380,master02=https://10.17.0.120:2380,master01=https://10.17.0.119:2380
    - --initial-cluster-state=existing
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379,https://10.17.0.121:2379
    - --listen-metrics-urls=http://127.0.0.1:2381
    - --listen-peer-urls=https://10.17.0.121:2380
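Hand-editing the three --initial-cluster values is error-prone. The following sketch builds the value from name=IP pairs so it can be compared with each manifest. It assumes peer port 2380, as in the manifests above, and member names equal to the node names. The function name is hypothetical.

```shell
# Sketch: build an etcd --initial-cluster value from name=IP arguments.
initial_cluster() {
  out=""
  for pair in "$@"; do
    name=${pair%%=*}     # text before the first "="
    ip=${pair#*=}        # text after the first "="
    out="${out:+$out,}$name=https://$ip:2380"
  done
  printf '%s\n' "$out"
}

# Usage:
#   initial_cluster master01=10.17.0.119 master02=10.17.0.120 master03=10.17.0.121
#   grep -- --initial-cluster= /etc/kubernetes/manifests/etcd.yaml
```

The member order differs between the three manifests above. So compare the set of members, not the literal string.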

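Let the masters run workloads

By default masters do not run workloads. You can remove the taint when initializing the cluster, or remove it afterwards as shown below.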

- View the taint:

kubectl describe node NODE_NAME | grep Taint

- Remove the taint:

kubectl taint nodes NODE_NAME node-role.kubernetes.io/master-

Join the worker nodes to the cluster

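Run the join command saved from the kubeadm init output. Tokens expire after 24 hours by default; if yours has expired, generate a new command with kubeadm token create --print-join-command.

kubeadm join 10.17.0.119:6443 --token vm59km.0djj6xlhrye4u8ts --discovery-token-ca-cert-hash sha256:bb70d2d1851de92cc946105141ede212d769a22db119ce4a6d0e62d2fbe1b637

On a master node, list the nodes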

kubectl get node
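To check the result from a script rather than by eye, the following sketch reads kubectl get node --no-headers output. It prints every node whose STATUS column is not exactly Ready, so a cordoned node showing Ready,SchedulingDisabled is reported too. The function name is hypothetical.

```shell
# Sketch: list nodes that are not Ready, reading "kubectl get node --no-headers"
# output on stdin. Exit status 0 = all nodes Ready, 1 = at least one is not.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}

# Usage on a master:
#   kubectl get node --no-headers | not_ready_nodes && echo "all nodes are Ready"
```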
